Deep Learning

The term deep learning refers to training neural networks, sometimes very large neural networks. So what exactly is a neural network?

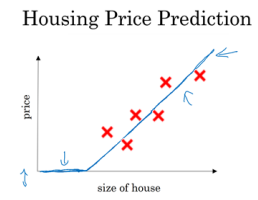

Let's start with the housing price prediction example. Let's say you have a data set with six houses: you know the size of each house in square feet or square meters, you know its price, and you want to fit a function that predicts the price of a house as a function of its size.

So if you are familiar with linear regression, you might say, let's fit a straight line to this data, and we get a straight line like that.

But we know that prices can never be negative. So instead of the straight-line fit, which eventually becomes negative, let's bend the curve here.

So it just ends up at zero here (as indicated by the blue arrows). This thick blue line ends up being your function for predicting the price of the house as a function of its size.

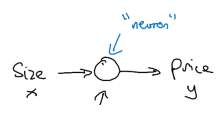

So you can think of this function that you've just fit to the housing prices as a very simple neural network. It's almost the simplest possible neural network. Let us draw it here.

As the input to the neural network we have the size of a house, which we call x. It goes into a node, which is a little circle, and then it outputs the price, which we call y. So this little circle, which is a single neuron in a neural network, implements the function that we drew on the left.

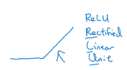

And all the neuron does is take the size as input, compute a linear function of it, take the max of that value and zero, and then output the estimated price.

In the neural network literature, this function is called a ReLU function, which stands for rectified linear unit. Rectify just means taking the max of 0 and the quantity, whichever is higher.
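As a minimal sketch of the single neuron described above, the following Python computes a linear function of the size and passes it through a ReLU. The names `relu` and `predict_price`, and the values of `W` and `B`, are hypothetical and chosen only for illustration; they do not come from the lecture.

```python
def relu(z: float) -> float:
    """Rectified linear unit: the larger of 0 and z."""
    return max(0.0, z)


def predict_price(size: float, w: float, b: float) -> float:
    """Single neuron: a linear function of the size, passed through ReLU."""
    return relu(w * size + b)


# Hypothetical slope and intercept, not fitted to any real data.
W, B = 0.25, -100.0

small_house = predict_price(300.0, W, B)   # linear part is -25, so ReLU clips it to 0
large_house = predict_price(2000.0, W, B)  # linear part is 400, so it passes through unchanged
```

For small sizes the linear part is negative and the prediction is clipped to zero, which is the flat segment of the bent line; for larger sizes the prediction follows the straight line.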

This is a single neuron. A larger neural network is then formed by taking many of these single neurons and stacking them together.

So, if you think of this neuron as being like a single Lego brick, you then get a bigger neural network by stacking together many of these Lego bricks. Let's see an example.

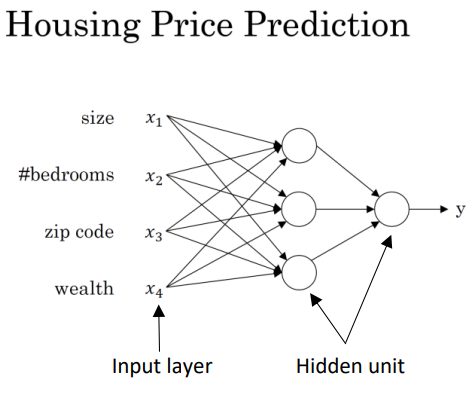

Let’s say that instead of predicting the price of a house just from the size, you now have other features. You know other things about the house, such as the number of bedrooms, the size in square feet or square meters, and the zip code.

So each of these features can be a little circle that is given as input to the neural network.

So in this example, x is all four of these inputs, and y is the price you're trying to predict.

And so by stacking together a few of the single neurons or the simple predictors we have from the previous slide, we now have a slightly larger neural network.

The way you work with a neural network is that, when you implement it, you only need to give it the input x and the output y for a number of examples in your training set, and all the things in the middle it will figure out by itself.

So what you actually implement is this: a neural network with four inputs.

So the input features might be the size, number of bedrooms, the zip code or postal code, and the wealth of the neighborhood.

And so given these input features, the job of the neural network will be to predict the price y.

And notice also that the circles in the middle layer are called hidden units in the neural network, and each of them takes all four input features as input.

We say that the input layer and this layer in the middle of the neural network are densely connected, because every input feature is connected to every one of these circles in the middle.
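To make the densely connected structure concrete, here is a rough sketch of a forward pass in plain Python. It assumes three hidden units, ReLU on every unit including the output, and made-up weights and already-numeric features; none of these values come from the lecture, and the helper names `dense_layer` and `forward` are hypothetical.

```python
def relu(z):
    """Rectified linear unit: the larger of 0 and z."""
    return max(0.0, z)


def dense_layer(inputs, weights, biases):
    """One densely connected layer: every unit receives every input."""
    return [
        relu(sum(w * x for w, x in zip(unit_weights, inputs)) + b)
        for unit_weights, b in zip(weights, biases)
    ]


def forward(x, hidden_w, hidden_b, out_w, out_b):
    """Four inputs -> hidden units -> one predicted price."""
    hidden = dense_layer(x, hidden_w, hidden_b)
    return dense_layer(hidden, [out_w], [out_b])[0]


# Hypothetical, already-numeric features: size, bedrooms, zip code, wealth.
x = [2.0, 3.0, 1.0, 0.5]

# One row of four weights per hidden unit (assumed: three hidden units).
hidden_w = [
    [1.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0, 1.0],
]
hidden_b = [0.0, -2.0, 0.0]
out_w = [1.0, 1.0, 2.0]
out_b = 0.5

hidden = dense_layer(x, hidden_w, hidden_b)            # [5.0, 0.0, 1.5]
price = forward(x, hidden_w, hidden_b, out_w, out_b)   # 8.5
```

Each row of `hidden_w` has one weight per input feature, which is what "every input feature is connected to every hidden unit" means in practice. In a real network these weights would be learned from the training set rather than written by hand.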

And the remarkable thing about neural networks is that, given enough data about x and y, given enough training examples with both x and y, neural networks are remarkably good at figuring out functions that accurately map from x to y.
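The text does not say how a network figures out the mapping from x to y. One common approach, assumed here, is gradient descent on the mean squared error. The sketch below applies it to a single ReLU neuron on six made-up houses (sizes in thousands of square feet, prices in thousands of dollars); the data, learning rate, step count, and the name `train_single_neuron` are all hypothetical.

```python
def train_single_neuron(sizes, prices, w=1.0, b=0.0, lr=0.05, steps=5000):
    """Fit price ~ max(0, w * size + b) by gradient descent on mean squared error."""
    n = len(sizes)
    for _ in range(steps):
        grad_w = 0.0
        grad_b = 0.0
        for x, y in zip(sizes, prices):
            z = w * x + b
            pred = max(0.0, z)
            # Derivative of ReLU: 1 where the linear part is positive, else 0.
            active = 1.0 if z > 0 else 0.0
            grad_w += 2.0 * (pred - y) * active * x / n
            grad_b += 2.0 * (pred - y) * active / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Made-up training set of six houses, generated from price = 100 * size - 50.
sizes = [1.0, 1.5, 2.0, 2.5, 3.0, 3.5]
prices = [50.0, 100.0, 150.0, 200.0, 250.0, 300.0]

w, b = train_single_neuron(sizes, prices)
```

Only the pairs of x and y are supplied; the loop adjusts `w` and `b` on its own until the predictions match the prices. On this toy data the learned values end up close to the slope 100 and intercept -50 used to generate it.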

So, that's a basic neural network. It turns out that neural networks are most useful and most powerful in supervised learning settings.