Gradient descent with momentum

There's an algorithm called momentum, or gradient descent with momentum that almost always works faster than the standard gradient descent algorithm.

In one sentence, the basic idea is to compute an exponentially weighted average of your gradients, and then use that gradient to update your weights instead.

In this section, let's unpack that one sentence description and see how you can actually implement this.

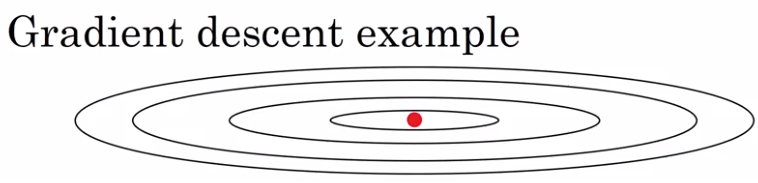

As a example let's say that you're trying to optimize a cost function which has contours like this. So the red dot denotes the position of the minimum.

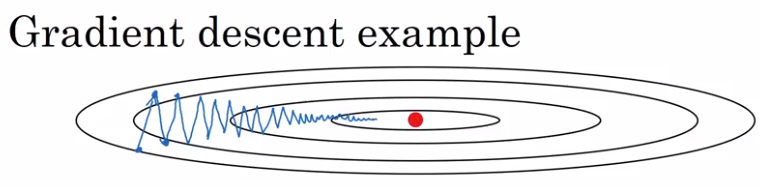

Maybe you start gradient descent here and if you take one iteration of gradient descent and then another step, another step, and so on. And you see that gradient descents will sort of take a lot of steps and Just slowly oscillate toward the minimum.

And this up and down oscillations slows down gradient descent and prevents you from using a much larger learning rate.

In particular, if you were to use a much larger learning rate you might end up over shooting and end up diverging.

And so the need to prevent the oscillations from getting too big forces you to use a learning rate that's not itself too large.

Another way of viewing this problem is that on the vertical axis you want your learning to be a bit slower, because you don't want those oscillations. But on the horizontal axis, you want faster learning. because you want it to aggressively move from left to right, toward that minimum, toward that red dot. This can be achieved with gradient descent with momentum.

So here's what you can do if you implement gradient descent with momentum.

On each iteration, or more specifically, during iteration t you would compute the usual derivatives dw, db.

And then what you do is you compute vdW to be Beta vdw plus 1 minus Beta dW. \( V_{dW} = \beta V_{dw} + (1 - \beta)dW \\ V_{db} = \beta V_{db} + (1 - \beta)db \)

So this is similar to when we're previously computing the \( V_1 = 0.98V_0 + 0.02\theta_1 \)

So it's computing a moving average of the derivatives for w.

And then you similarly compute vdb equals that plus 1 minus Beta times db.

And then you would update your weights using W gets updated as W minus the learning rate times, instead of updating it with dW, with the derivative, you update it with vdW. And similarly, b gets updated as b minus alpha times vdb. So what this does is smooth out the steps of gradient descent.

\( W := W - \alpha * V_{dW} \\ b := b - \alpha * V_{db} \)

Using this method, you find that the oscillations in the vertical direction will tend to average out to something closer to zero.

So, in the vertical direction, where you want to slow things down, this will average out positive and negative numbers, so the average will be close to zero.

Whereas, on the horizontal direction, all the derivatives are pointing to the right of the horizontal direction, so the average in the horizontal direction will still be pretty big.

So that's why with this algorithm, with a few iterations you find that the gradient descent with momentum ends up eventually just taking steps that are much smaller oscillations in the vertical direction, but are more directed to just moving quickly in the horizontal direction.

And so this allows your algorithm to take a more straightforward path, or to damp out the oscillations in this path to the minimum.

Finally, let's look at some details on how you implement this.

Here the algorithm has two hyperparameters of the learning rate alpha, as well as this parameter Beta, which controls your exponentially weighted average.

The most common value for Beta is 0.9.

We're averaging over the last ten days temperature. So it is averaging of the last ten iteration's gradients.

And in practice, Beta equals 0.9 works very well.

Feel free to try different values and do some hyperparameter search, but 0.9 appears to be a pretty robust value.

And how about bias correction? In practice, people don't usually do this because after just ten iterations, your moving average will have warmed up and is no longer a bias estimate.

So in practice, I don't really see people bothering with bias correction when implementing gradient descent or momentum.