Transfer learning

One of the most powerful ideas in deep learning is that sometimes you can take knowledge the neural network has learned from one task and apply that knowledge to a separate task.

So for example, you could have the neural network learn to recognize objects like cats and then use that knowledge or use part of that knowledge to help you do a better job reading x-ray scans. This is called transfer learning.

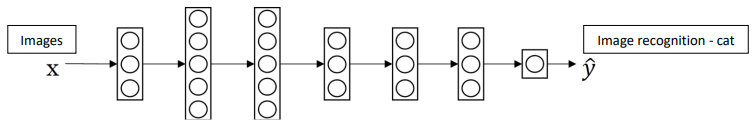

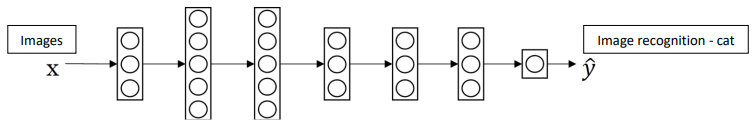

Let's say you've trained your neural network on image recognition. So you first take a neural network and train it on (X, Y) pairs, where X is an image and Y is the label of some object.

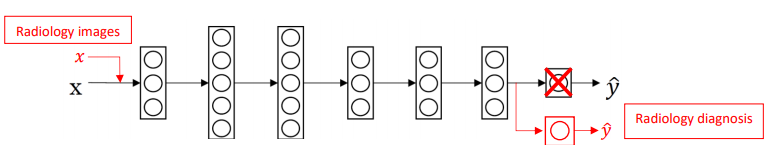

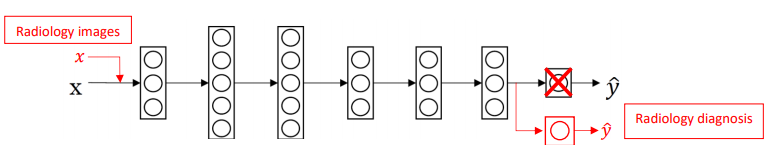

If you want to take this neural network and adapt, or as we say transfer, what it has learned to a different task, such as radiology diagnosis (that is, reading X-ray scans), here is what you can do. Take the last output layer of the neural network and delete it, along with the weights feeding into that layer. Then create a new set of randomly initialized weights just for the last layer, and have that layer output the radiology diagnosis.
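As a minimal sketch of this layer swap, assume the network is stored as a plain list of `(W, b)` NumPy arrays, one pair per layer. The helper names (`init_layer`, `replace_output_layer`), the layer sizes, and the random stand-in for pre-trained weights are all hypothetical and are not from the lecture.

```python
import numpy as np

def init_layer(n_in, n_out, rng):
    """Return small random weights and zero biases for one dense layer."""
    W = rng.standard_normal((n_out, n_in)) * 0.01
    b = np.zeros((n_out, 1))
    return W, b

def replace_output_layer(layers, n_new_outputs, rng):
    """Drop the last (W, b) pair and attach a freshly initialized one.

    `layers` is a list of (W, b) tuples, with W of shape (n_out, n_in).
    The earlier layers are reused as-is; only the new output layer is random.
    """
    kept = layers[:-1]                 # delete the old output layer and its weights
    n_in = layers[-1][0].shape[1]      # the new layer reads the same hidden activations
    return kept + [init_layer(n_in, n_new_outputs, rng)]

rng = np.random.default_rng(0)
# Hypothetical "pre-trained" image network: 64 inputs -> 16 -> 8 -> 1000 classes.
# (Random weights stand in for weights learned on image recognition.)
pretrained = [init_layer(64, 16, rng), init_layer(16, 8, rng), init_layer(8, 1000, rng)]
# Radiology network: same hidden layers, one new output unit for the diagnosis.
radiology = replace_output_layer(pretrained, 1, rng)
```

The hidden layers of `radiology` are the very same arrays as in `pretrained`, which is the point: only the output layer starts from scratch.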

So to be concrete, during the first phase of training when you're training on an image recognition task, you train all of the usual parameters for the neural network, all the weights, all the layers and you have something that now learns to make image recognition predictions.

Having trained that neural network, what you now do to implement transfer learning is swap in a new data set (X, Y), where the X are now radiology images and the Y are the diagnoses you want to predict. You randomly initialize the last layer's weights and then retrain the neural network on this new radiology data set. You have a couple of options for how to retrain the neural network with radiology data.

If you have a small radiology data set, you might want to retrain just the weights of the last layer and keep the rest of the parameters fixed.

If you have enough data, you could also retrain all the other layers of the neural network. The rule of thumb is that if you have a small data set, you retrain just the output layer, or maybe the last one or two layers.

But if you have a lot of data, then maybe you can retrain all the parameters in the network. And if you retrain all the parameters in the neural network, then this initial phase of training on image recognition is sometimes called pre-training, because you're using image recognition data to pre-initialize, or really pre-train, the weights of the neural network.

And then, if you are updating all the weights afterwards, training on the radiology data is sometimes called fine-tuning. So when you hear the words pre-training and fine-tuning in a deep learning context, this is what they mean in a transfer learning setting.
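The two retraining options (last layer only, or all layers) can be sketched with plain NumPy gradient descent. This is an illustrative sketch under stated assumptions, not the lecture's implementation: the tiny network, the tanh and sigmoid activations, the learning rate, the random "pre-trained" weights, and the synthetic 20-example data set are all hypothetical. The `n_trainable` parameter is an invented knob for how many layers, counted from the output, get updated.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X, layers):
    """Return the activations of every layer (tanh hidden units, sigmoid output)."""
    acts = [X]
    for i, (W, b) in enumerate(layers):
        Z = W @ acts[-1] + b
        acts.append(sigmoid(Z) if i == len(layers) - 1 else np.tanh(Z))
    return acts

def loss(X, Y, layers):
    """Mean binary cross-entropy of the network's predictions."""
    A = forward(X, layers)[-1]
    return float(-np.mean(Y * np.log(A) + (1 - Y) * np.log(1 - A)))

def fine_tune(X, Y, layers, n_trainable, lr=0.1, steps=300):
    """Gradient descent that updates only the last `n_trainable` layers."""
    layers = [(W.copy(), b.copy()) for W, b in layers]  # leave the input weights untouched
    m = X.shape[1]
    first_trainable = len(layers) - n_trainable
    for _ in range(steps):
        acts = forward(X, layers)
        dZ = acts[-1] - Y                               # sigmoid + cross-entropy gradient
        for i in reversed(range(first_trainable, len(layers))):
            W, b = layers[i]
            dW = dZ @ acts[i].T / m
            db = dZ.sum(axis=1, keepdims=True) / m
            if i > first_trainable:                     # keep backpropagating only while needed
                dZ = (W.T @ dZ) * (1 - acts[i] ** 2)    # tanh derivative
            layers[i] = (W - lr * dW, b - lr * db)
    return layers

rng = np.random.default_rng(0)
# Stand-in for pre-trained hidden weights plus a new random output layer: 4 -> 5 -> 1.
pretrained = [(rng.standard_normal((5, 4)), np.zeros((5, 1))),
              (rng.standard_normal((1, 5)) * 0.01, np.zeros((1, 1)))]
# Tiny synthetic "radiology" data set: 20 examples with binary labels.
X = rng.standard_normal((4, 20))
Y = (X[0:1] + X[1:2] > 0).astype(float)

last_only = fine_tune(X, Y, pretrained, n_trainable=1)   # small data: retrain the output layer
all_layers = fine_tune(X, Y, pretrained, n_trainable=2)  # more data: fine-tune everything
```

With `n_trainable=1` the hidden-layer weights come back unchanged, while `n_trainable=2` moves them too, which is what fine-tuning all the weights means here.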

And what you've done in this example is take knowledge learned from image recognition and apply it, or transfer it, to radiology diagnosis. The reason this can be helpful is that a lot of the low-level features, such as detecting edges, detecting curves, and detecting parts of objects, learned from a very large image recognition data set, might help your learning algorithm do better in radiology diagnosis.

The network has learned a lot about the structure and the nature of what images look like, and some of that knowledge will be useful. Having learned to recognize images, it might have learned enough about what parts of different images look like (lines, dots, curves, maybe small parts of objects) that this knowledge helps your radiology diagnosis network learn a bit faster or learn with less data.

So, when does transfer learning make sense? Transfer learning makes sense when you have a lot of data for the problem you're transferring from and usually relatively less data for the problem you're transferring to.

So for example, let's say you have a million examples for the image recognition task. That's a lot of data to learn a lot of low-level features, or a lot of useful features, in the earlier layers of the neural network. But for the radiology task, maybe you have only a hundred examples. So you have very little data for the radiology diagnosis problem, maybe only 100 X-ray scans. A lot of the knowledge you learn from image recognition can be transferred and can really help you get going with radiology diagnosis, even if you don't have much radiology data.

One case where transfer learning would not make sense is if the opposite were true. Say you had a hundred images for image recognition and a hundred images for radiology diagnosis, or even a thousand images for radiology diagnosis. One way to think about it is that, assuming what you really want is to do well on radiology diagnosis, having radiology images is much more valuable than having images of cats, dogs, and so on. So each radiology example is much more valuable than each image recognition example, at least for the purpose of building a good radiology system.

So, if you already have more data for radiology, it's not that likely that having 100 images of random objects such as cats, dogs, and cars will be that helpful, because one image from your image recognition task is just less valuable than one X-ray image for the task of building a good radiology system. So this would be one example where transfer learning, well, it might not hurt, but I wouldn't expect it to give you any meaningful gain either.

So to summarize, when does transfer learning make sense? If you're trying to learn from some Task A and transfer some of the knowledge to some Task B, then transfer learning makes sense when Task A and B have the same input X. In the example above, A and B both have images as input. The same would hold for two tasks that both take audio clips as input.

It tends to make sense when you have a lot more data for Task A than for Task B. All this is under the assumption that what you really want to do well on is Task B. And because each example from Task A is less valuable for Task B than each example from Task B, you usually need a lot more data for Task A for the transfer to pay off.

And then finally, transfer learning will tend to make more sense if you suspect that low level features from Task A could be helpful for learning Task B.
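The three conditions can be written down as a small checklist function. This is only a sketch: the function name, its parameters, and especially `min_ratio` (an arbitrary stand-in for "a lot more data") are hypothetical and are not from the lecture, which gives no numeric threshold.

```python
def transfer_learning_makes_sense(same_input_type, n_examples_a, n_examples_b,
                                  low_level_features_help, min_ratio=10):
    """Checklist form of the three conditions for transferring from Task A to Task B.

    `min_ratio` is an assumed, arbitrary threshold for "a lot more data for Task A".
    """
    has_much_more_a = n_examples_a >= min_ratio * n_examples_b
    return same_input_type and has_much_more_a and low_level_features_help

# A million image recognition examples, 100 X-ray scans: a good candidate.
good_case = transfer_learning_makes_sense(True, 1_000_000, 100, True)
# 100 image recognition examples, 1000 X-ray scans: unlikely to help.
poor_case = transfer_learning_makes_sense(True, 100, 1000, True)
```

Treat the result as a rule of thumb rather than a guarantee; the conditions say when transfer is likely to help, not that it will.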

Summary - Transfer learning

Transfer learning refers to reusing the knowledge a neural network has learned on one task for another application.

When to use transfer learning

Task A and B have the same input 𝑥

A lot more data for Task A than Task B

Low level features from Task A could be helpful for Task B

Example 1: Cat recognition - radiology diagnosis

A neural network is trained for cat recognition, but we want to adapt it for radiology diagnosis.

The neural network will learn about the structure and the nature of images. This initial phase of training on image recognition is called pre-training, since it will pre-initialize the weights of the neural network.

Updating all the weights afterwards is called fine-tuning.

For cat recognition

Input x : image

Output y : 1: cat, 0: no cat

Radiology diagnosis

Input x : Radiology images – CT Scan, X-rays

Output y : Radiology diagnosis – 1: malignant tumor, 0: benign tumor

Guideline

Delete last layer of neural network

Delete weights feeding into the last output layer of the neural network

Create a new set of randomly initialized weights for the last layer only

New data set (x, y)