Linear regression with multiple variables (Gradient Descent For Multiple Variables) - Introduction

Previously, we talked about the form of the hypothesis for linear regression with multiple features, or multiple variables. Now let's talk about how to fit the parameters of that hypothesis; in particular, how to use gradient descent for linear regression with multiple features.

To quickly summarize our notation, our hypothesis in multivariable linear regression is \( h_\theta(x) = \theta^T x = \theta_0 x_0 + \theta_1 x_1 + \cdots + \theta_n x_n \), where we've adopted the convention that \( x_0 = 1 \).

The parameters of this model are theta0 through theta n, but instead of thinking of these as n+1 separate parameters, which is valid, I'm going to think of the parameters as theta, where theta is an (n+1)-dimensional vector.

So I'm going to think of the parameters of this model as a single vector. Our cost function is J of theta0 through theta n, given by the usual sum of squared errors: \( J(\theta) = \frac{1}{2m} \sum_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)})^2 \).

But again instead of thinking of J as a function of these n+1 numbers, I'm going to more commonly write J as just a function of the parameter vector theta so that theta here is a vector.
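As an illustration (a minimal sketch, not the course's own code), the hypothesis and cost function can be written in plain Python, with θ and each x stored as lists that already include \( x_0 = 1 \). The function names `hypothesis` and `cost` and the toy dataset are hypothetical.

```python
def hypothesis(theta, x):
    """h_theta(x) = theta^T x; x must already include x_0 = 1."""
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    """J(theta) = 1/(2m) * sum over i of (h_theta(x^(i)) - y^(i))^2."""
    m = len(y)
    return sum((hypothesis(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)


# Hypothetical toy data: each row is [x_0 = 1, x_1, x_2], generated from
# y = 1 + 2*x_1 + 3*x_2, so theta = [1, 2, 3] fits it exactly.
X = [[1, 1, 2], [1, 2, 0], [1, 3, 1]]
y = [9, 5, 10]

print(cost([1, 2, 3], X, y))  # 0.0
print(cost([0, 0, 0], X, y))  # (81 + 25 + 100) / 6, about 34.33
```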

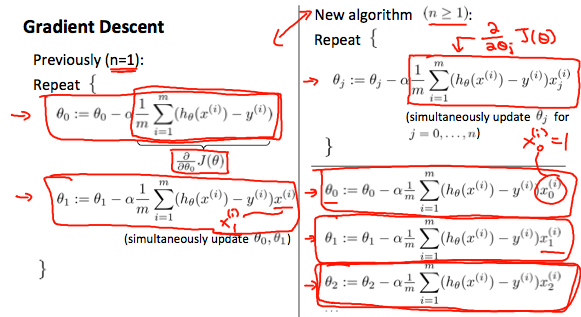

Gradient descent then looks like this:

\begin{align*} & \text{repeat until convergence:} \; \lbrace \newline \; & \theta_0 := \theta_0 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_0^{(i)}\newline \; & \theta_1 := \theta_1 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_1^{(i)} \newline \; & \theta_2 := \theta_2 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_2^{(i)} \newline & \cdots \newline \rbrace \end{align*}

We repeatedly update each parameter \( \theta_j \) by subtracting the learning rate \( \alpha \) times the partial derivative of the cost function with respect to that parameter. Writing the cost once again as \( J(\theta) \), the update is \( \theta_j := \theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \).

Let's see what this looks like when we implement gradient descent and, in particular, what that partial derivative term looks like. Here's what we had for gradient descent in the case of n = 1 feature.

We had two separate update rules for the parameters theta0 and theta1, and hopefully these look familiar to you. The term in the theta0 rule was the partial derivative of the cost function with respect to theta0, and similarly we had a separate update rule for theta1.

There's one small difference: when we previously had only one feature, we called that feature \( x^{(i)} \), but in our new notation we call it \( x_1^{(i)} \) to denote our one feature. So that was for when we had only one feature.
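One way to convince yourself that the partial derivative of J with respect to \( \theta_j \) is \( \frac{1}{m} \sum_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) x_j^{(i)} \) is to compare it numerically against a central finite-difference estimate. This plain-Python sketch uses hypothetical helper names and toy data; a gradient check like this is a debugging aid, not part of gradient descent itself.

```python
def hypothesis(theta, x):
    """h_theta(x) = theta^T x, with x_0 = 1 already in x."""
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    """J(theta) = 1/(2m) * sum of squared errors."""
    m = len(y)
    return sum((hypothesis(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)


def gradient(theta, X, y):
    """Analytic partials: dJ/dtheta_j = (1/m) * sum_i (h(x^(i)) - y^(i)) * x_j^(i)."""
    m = len(y)
    errors = [hypothesis(theta, xi) - yi for xi, yi in zip(X, y)]
    return [sum(e * xi[j] for e, xi in zip(errors, X)) / m for j in range(len(theta))]


def numerical_gradient(theta, X, y, eps=1e-6):
    """Central finite-difference estimate of each partial derivative of J."""
    grad = []
    for j in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[j] += eps
        minus[j] -= eps
        grad.append((cost(plus, X, y) - cost(minus, X, y)) / (2 * eps))
    return grad


X = [[1, 1, 2], [1, 2, 0], [1, 3, 1]]  # hypothetical rows [x_0 = 1, x_1, x_2]
y = [9, 5, 10]
theta = [0.5, -1.0, 2.0]
print(gradient(theta, X, y))
print(numerical_gradient(theta, X, y))  # should agree to several decimal places
```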

Linear regression with multiple variables - Gradient Descent in Practice - Feature Scaling

We can speed up gradient descent by having each of our input values in roughly the same range. This is because \( \theta \) will descend quickly on small ranges and slowly on large ranges, and so will oscillate inefficiently down to the optimum when the variables are very uneven.

The way to prevent this is to modify the ranges of our input variables so that they are all roughly the same. Ideally: \( -1 \leq x_i \leq 1 \) or \( -0.5 \leq x_i \leq 0.5 \).

These aren't exact requirements; we are only trying to speed things up. The goal is to get all input variables into roughly one of these ranges, give or take a few.

Two techniques to help with this are feature scaling and mean normalization.

Feature scaling involves dividing the input values by the range (i.e. the maximum value minus the minimum value) of the input variable, resulting in a new range of just 1.

Mean normalization involves subtracting the average value of an input variable from each of that variable's values, resulting in a new average value of zero.

To implement both of these techniques, adjust your input values as shown in this formula:

\( x_i := \dfrac{x_i - \mu_i}{s_i} \)

Where \( \mu_i \) is the average of all the values for feature i, and \( s_i \) is either the range of values (max - min) or the standard deviation.

Note that dividing by the range and dividing by the standard deviation give different results. The quizzes in this course use the range, while the programming exercises use the standard deviation.

For example, if \( x_i \) represents housing prices with a range of 100 to 2000 and a mean value of 1000, then \( x_i := \dfrac{\text{price}-1000}{1900} \).
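A minimal sketch of mean normalization in Python, assuming the standard-library `statistics` module. Whether \( s_i \) should be the sample or the population standard deviation is an assumption here (`statistics.stdev` vs `statistics.pstdev`); the prices are hypothetical values chosen to match the housing example.

```python
import statistics


def normalize_feature(values, use_std=False):
    """Mean-normalize one feature: x := (x - mu) / s.

    s is the range (max - min) by default, or the sample standard
    deviation when use_std=True (an assumption; pstdev is the alternative).
    Returns the scaled values plus mu and s.
    """
    mu = statistics.mean(values)
    s = statistics.stdev(values) if use_std else max(values) - min(values)
    return [(v - mu) / s for v in values], mu, s


# Hypothetical prices: min 100, max 2000, mean 1000.
prices = [100, 900, 2000]
scaled, mu, s = normalize_feature(prices)
print(mu, s)    # mu = 1000, s = 1900
print(scaled)   # each value is (price - 1000) / 1900
```

Keep `mu` and `s`: a new input must be scaled with the same values before it is fed to the hypothesis.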

Linear regression with multiple variables - Gradient Descent in Practice - Learning Rate

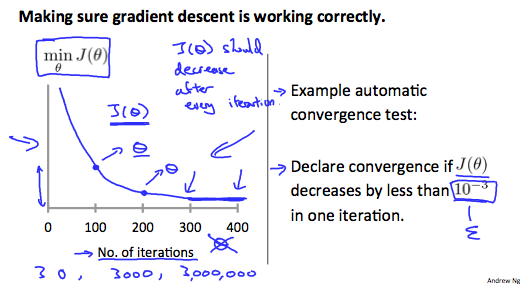

Debugging gradient descent. Make a plot with number of iterations on the x-axis. Now plot the cost function, J(θ) over the number of iterations of gradient descent. If J(θ) ever increases, then you probably need to decrease α.

Automatic convergence test. Declare convergence if J(θ) decreases by less than E in one iteration, where E is some small value such as \( 10^{-3} \). However, in practice it's difficult to choose this threshold value.
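The debugging plot and the automatic convergence test can be combined in one loop. This sketch records J(θ) after every iteration (the data you would plot against the iteration number), stops when the decrease falls below E, and raises an error if J ever increases. The function name, the default E = \( 10^{-3} \), the iteration cap, and the choice to raise rather than warn are all assumptions for illustration.

```python
def hypothesis(theta, x):
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    m = len(y)
    return sum((hypothesis(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)


def gradient_descent(X, y, alpha, epsilon=1e-3, max_iters=10_000):
    """Batch gradient descent that records J(theta) after every iteration.

    Stops when J decreases by less than epsilon in one iteration (the
    automatic convergence test) and raises if J ever increases, which
    usually means alpha is too large.
    """
    m, n_plus_1 = len(y), len(X[0])
    theta = [0.0] * n_plus_1
    history = [cost(theta, X, y)]
    for _ in range(max_iters):
        errors = [hypothesis(theta, xi) - yi for xi, yi in zip(X, y)]
        # Simultaneous update: every partial derivative uses the same old theta.
        theta = [theta[j] - alpha * sum(e * xi[j] for e, xi in zip(errors, X)) / m
                 for j in range(n_plus_1)]
        history.append(cost(theta, X, y))
        if history[-1] > history[-2]:
            raise ValueError("J(theta) increased; try a smaller alpha")
        if history[-2] - history[-1] < epsilon:
            break
    return theta, history


X = [[1, 1], [1, 2], [1, 3]]   # hypothetical data: rows [x_0 = 1, x_1]
y = [2, 4, 6]
theta, history = gradient_descent(X, y, alpha=0.1)
# Plotting history against its index gives the "J(theta) vs. iterations" curve.
```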

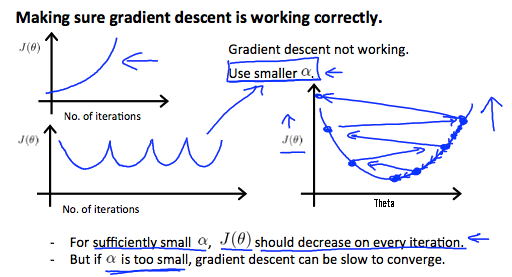

It has been proven that if the learning rate \( \alpha \) is sufficiently small, then J(θ) will decrease on every iteration.

To summarize:

If \( \alpha \) is too small: slow convergence.

If \( \alpha \) is too large: may not decrease on every iteration and thus may not converge.
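To see both failure modes on a small, hypothetical dataset, this sketch runs a fixed 50 iterations with three learning rates and compares J(θ) at the start and the end. The specific α values are illustrative assumptions: on this data α = 0.001 decreases J only slowly, α = 0.1 converges quickly, and α = 1.0 makes J grow.

```python
def hypothesis(theta, x):
    return sum(t * xj for t, xj in zip(theta, x))


def cost(theta, X, y):
    m = len(y)
    return sum((hypothesis(theta, xi) - yi) ** 2 for xi, yi in zip(X, y)) / (2 * m)


def run(X, y, alpha, iters):
    """Run a fixed number of gradient descent iterations; return the J(theta) history."""
    m, n_plus_1 = len(y), len(X[0])
    theta = [0.0] * n_plus_1
    history = [cost(theta, X, y)]
    for _ in range(iters):
        errors = [hypothesis(theta, xi) - yi for xi, yi in zip(X, y)]
        theta = [theta[j] - alpha * sum(e * xi[j] for e, xi in zip(errors, X)) / m
                 for j in range(n_plus_1)]
        history.append(cost(theta, X, y))
    return history


X = [[1, 1], [1, 2], [1, 3]]  # hypothetical rows [x_0 = 1, x_1]
y = [2, 4, 6]
for alpha in (0.001, 0.1, 1.0):
    history = run(X, y, alpha, iters=50)
    print(alpha, history[0], history[-1])
```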

Linear regression with multiple variables - Features and Polynomial Regression

We can improve our features and the form of our hypothesis function in a couple different ways.

We can combine multiple features into one. For example, we can combine \( x_1 \) and \( x_2 \) into a new feature \( x_3 \) by taking \( x_1 \cdot x_2 \).

Polynomial Regression

Our hypothesis function need not be linear (a straight line) if that does not fit the data well.

We can change the behavior or curve of our hypothesis function by making it a quadratic, cubic or square root function (or any other form).

For example, if our hypothesis function is \( h_\theta(x) = \theta_0 + \theta_1 x_1 \) then we can create additional features based on \( x_1 \), to get the quadratic function \( h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_1^2 \) or the cubic function \( h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_1^2 + \theta_3 x_1^3 \).

In the cubic version, we have created new features \( x_2 \) and \( x_3 \) where \( x_2 = x_1^2 \) and \( x_3 = x_1^3 \).

To make it a square root function, we could do: \( h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 \sqrt{x_1} \)
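Creating these features is a preprocessing step: the hypothesis stays linear in θ, so the same gradient descent (or normal equation) applies to the new feature vector. A minimal plain-Python sketch, with hypothetical helper names, covering the polynomial, square-root, and combined-product features:

```python
import math


def polynomial_features(x1, degree):
    """[x_0 = 1, x1, x1^2, ..., x1^degree] for a single raw input x1."""
    return [x1 ** p for p in range(degree + 1)]


def sqrt_features(x1):
    """[x_0 = 1, x1, sqrt(x1)] for the square-root hypothesis."""
    return [1.0, x1, math.sqrt(x1)]


def product_feature(x1, x2):
    """Combine two features into one: x3 = x1 * x2."""
    return x1 * x2


print(polynomial_features(2, 3))  # [1, 2, 4, 8]
print(sqrt_features(4.0))         # [1.0, 4.0, 2.0]
print(product_feature(3, 5))      # 15
```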

One important thing to keep in mind is that if you choose your features this way, feature scaling becomes very important.

For example, if \( x_1 \) has range 1 to 1000, then the range of \( x_1^2 \) becomes 1 to 1,000,000 and that of \( x_1^3 \) becomes 1 to 1,000,000,000.
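A quick check of those ranges, assuming a hypothetical feature that takes every integer value from 1 to 1000, followed by mean normalization with the range (max - min) as \( s_i \) so that each scaled column spans exactly 1:

```python
def column_range(values):
    """max - min of one feature column."""
    return max(values) - min(values)


def mean_normalize(col):
    """(x - mu) / s with s = max - min."""
    mu = sum(col) / len(col)
    s = max(col) - min(col)
    return [(v - mu) / s for v in col]


x1 = list(range(1, 1001))  # hypothetical feature spanning 1 to 1000
columns = {p: [v ** p for v in x1] for p in (1, 2, 3)}
for p, col in columns.items():
    print(p, column_range(col))   # 999, 999999, 999999999

scaled = {p: mean_normalize(col) for p, col in columns.items()}
for p, col in scaled.items():
    print(p, min(col), max(col))  # every scaled column now spans a range of 1
```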

Linear regression with multiple variables - Gradient Descent For Multiple Variables - Summary

The gradient descent equation itself has the same general form; we just have to repeat it for our n features:

\begin{align*} & \text{repeat until convergence:} \; \lbrace \newline \; & \theta_0 := \theta_0 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_0^{(i)}\newline \; & \theta_1 := \theta_1 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_1^{(i)} \newline \; & \theta_2 := \theta_2 - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_2^{(i)} \newline & \cdots \newline \rbrace \end{align*}

In other words:

\begin{align*}& \text{repeat until convergence:} \; \lbrace \newline \; & \theta_j := \theta_j - \alpha \frac{1}{m} \sum\limits_{i=1}^{m} (h_\theta(x^{(i)}) - y^{(i)}) \cdot x_j^{(i)} \; & \text{for j := 0...n}\newline \rbrace\end{align*}
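The single j-indexed rule maps directly onto one loop over j. In this plain-Python sketch (hypothetical function name, toy data generated from \( y = 1 + 2x_1 + 3x_2 \)), all \( \theta_j \) are computed from the same old θ before any of them is replaced, which is the simultaneous update the rule requires. The learning rate and iteration count are assumptions chosen so it converges on this data.

```python
def gradient_descent(X, y, theta, alpha, num_iters):
    """Repeat theta_j := theta_j - alpha/m * sum_i (h(x^(i)) - y^(i)) * x_j^(i) for j = 0..n."""
    m = len(y)
    for _ in range(num_iters):
        # Errors h_theta(x^(i)) - y^(i), all computed with the current theta.
        errors = [sum(t * xj for t, xj in zip(theta, xi)) - yi for xi, yi in zip(X, y)]
        # Build the whole new theta before replacing the old one.
        theta = [theta[j] - alpha / m * sum(e * xi[j] for e, xi in zip(errors, X))
                 for j in range(len(theta))]
    return theta


X = [[1, 1, 2], [1, 2, 0], [1, 3, 1]]  # hypothetical rows [x_0 = 1, x_1, x_2]
y = [9, 5, 10]
theta = gradient_descent(X, y, [0.0, 0.0, 0.0], alpha=0.1, num_iters=5000)
print([round(t, 4) for t in theta])  # close to [1.0, 2.0, 3.0]
```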

The following image compares gradient descent with one variable to gradient descent with multiple variables:

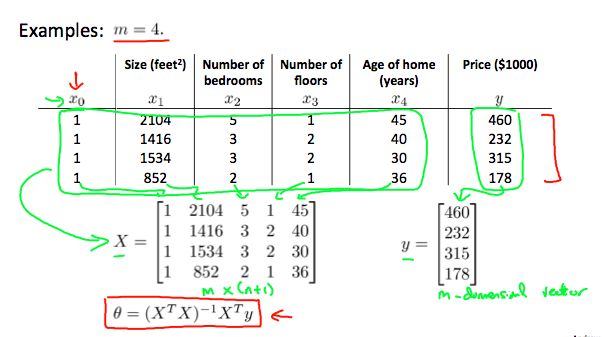

Linear regression with multiple variables - Normal Equation

Gradient descent gives one way of minimizing J. Let’s discuss a second way of doing so, this time performing the minimization explicitly and without resorting to an iterative algorithm.

In the "Normal Equation" method, we minimize J by explicitly taking its derivatives with respect to the \( \theta_j \)'s and setting them to zero. This allows us to find the optimum theta without iteration. The normal equation formula is given below:

\( \theta = (X^TX)^{-1} X^Ty \)
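A dependency-free sketch of the normal equation. Rather than forming \( (X^TX)^{-1} \) explicitly, it solves the linear system \( X^TX\,\theta = X^Ty \) by Gauss-Jordan elimination with partial pivoting, which gives the same θ whenever \( X^TX \) is invertible and is the usual numerically preferable substitute for an explicit inverse. The helper names and data are hypothetical; note that no feature scaling is applied.

```python
def transpose(A):
    return [list(col) for col in zip(*A)]


def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def solve(A, b):
    """Solve A z = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(A)
    M = [[float(v) for v in row] + [float(bi)] for row, bi in zip(A, b)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(M[r][c]))  # largest pivot in column c
        if abs(M[p][c]) < 1e-12:
            raise ValueError("matrix is singular (or nearly so)")
        M[c], M[p] = M[p], M[c]
        for r in range(n):
            if r != c:
                f = M[r][c] / M[c][c]
                M[r] = [a - f * pc for a, pc in zip(M[r], M[c])]
    return [M[i][n] / M[i][i] for i in range(n)]


def normal_equation(X, y):
    """theta = (X^T X)^{-1} X^T y, computed by solving (X^T X) theta = X^T y."""
    Xt = transpose(X)
    XtX = matmul(Xt, X)
    Xty = [sum(a * b for a, b in zip(row, y)) for row in Xt]
    return solve(XtX, Xty)


X = [[1, 1, 2], [1, 2, 0], [1, 3, 1], [1, 0, 0]]  # rows: [x_0 = 1, x_1, x_2]
y = [9, 5, 10, 1]                                 # generated from y = 1 + 2*x_1 + 3*x_2
print(normal_equation(X, y))  # approximately [1.0, 2.0, 3.0]
```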

There is no need to do feature scaling with the normal equation.

The following is a comparison of gradient descent and the normal equation:

| Gradient Descent | Normal Equation |
| --- | --- |
| Need to choose alpha | No need to choose alpha |
| Needs many iterations | No need to iterate |
| \( O(kn^2) \) | \( O(n^3) \), need to calculate inverse of \( X^TX \) |
| Works well when n is large | Slow if n is very large |

With the normal equation, computing the inversion has complexity \( \mathcal{O}(n^3) \), so if we have a very large number of features, the normal equation will be slow. In practice, when n exceeds 10,000, it might be a good time to switch from the normal equation to an iterative process such as gradient descent.

Normal Equation Noninvertibility

When implementing the normal equation in Octave, we want to use the 'pinv' function rather than 'inv'. The 'pinv' function will give you a value of \( \theta \) even if \( X^TX \) is not invertible.

If \( X^TX \) is noninvertible, common causes are:

Redundant features, where two features are very closely related (i.e. they are linearly dependent)

Too many features (e.g. m ≤ n). In this case, delete some features or use "regularization" (to be explained in a later lesson).

Solutions to the above problems include deleting a feature that is linearly dependent with another or deleting one or more features when there are too many features.
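To make the two causes concrete, this sketch checks whether \( X^TX \) is invertible by computing its exact rank with Python's `fractions.Fraction` (so no floating-point tolerance is needed): it is invertible exactly when the rank equals n + 1, the number of columns of X. The matrices and helper names are hypothetical. It only detects the problem; it does not reproduce Octave's `pinv` (in Python, `numpy.linalg.pinv` plays that role).

```python
from fractions import Fraction


def gram(X):
    """X^T X for a design matrix X stored as a list of rows."""
    cols = list(zip(*X))
    return [[sum(a * b for a, b in zip(ci, cj)) for cj in cols] for ci in cols]


def rank(A):
    """Exact matrix rank via Gaussian elimination over the rationals."""
    M = [[Fraction(v) for v in row] for row in A]
    rows, cols = len(M), len(M[0])
    r = 0
    for c in range(cols):
        pivot = next((i for i in range(r, rows) if M[i][c] != 0), None)
        if pivot is None:
            continue  # no pivot in this column
        M[r], M[pivot] = M[pivot], M[r]
        for i in range(rows):
            if i != r and M[i][c] != 0:
                f = M[i][c] / M[r][c]
                M[i] = [a - f * b for a, b in zip(M[i], M[r])]
        r += 1
        if r == rows:
            break
    return r


def is_invertible_XtX(X):
    """X^T X is invertible exactly when its rank equals n + 1 (the number of columns)."""
    return rank(gram(X)) == len(X[0])


ok = [[1, 1, 2], [1, 2, 0], [1, 3, 1]]
redundant = [[1, 1, 2], [1, 2, 4], [1, 3, 6]]  # x_2 = 2 * x_1 (linearly dependent)
too_many = [[1, 1, 2, 3], [1, 4, 5, 6]]        # m = 2 <= n = 3
print(is_invertible_XtX(ok), is_invertible_XtX(redundant), is_invertible_XtX(too_many))
# True False False
```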

Programming Assignment

Programming Assignment 1: Using Octave

Programming Assignment 2: Implement Linear Regression