Deadlocks

In a multi-programming environment, several processes may compete for a finite number of resources. A process requests resources; if the resources are not available at that time, the process enters a waiting state.

Sometimes, a waiting process is never again able to change state, because the resources it has requested are held by other waiting processes.

This situation is called a deadlock

Under the normal mode of operation, a process may utilize a resource in only the following sequence. The request and release of resources are system calls

Request. The process requests the resource. If the request cannot be granted immediately then the requesting process must wait until it can acquire the resource.

Use. The process can operate on the resource

Release. The process releases the resource.

Features that characterize deadlocks

A deadlock situation can arise if the following four conditions hold simultaneously in a system :

Mutual exclusion. At least one resource must be held in a non-sharable mode; that is, only one process at a time can use the resource. If another process requests that resource, the requesting process must be delayed until the resource has been released.

Hold and wait. A process must be holding at least one resource and waiting to acquire additional resources that are currently being held by other processes.

No preemption. Resources cannot be preempted; that is, a resource can be released only voluntarily by the process holding it, after that process has completed its task.

Circular wait. A set { P0, Pl, ... , Pn} of waiting processes must exist such that P0 is waiting for a resource held by P1 , P1 is waiting for a resource held by P2, ... , Pn-1 is waiting for a resource held by Pn and Pn is waiting for a resource held by P0.

Resource-Allocation Graph

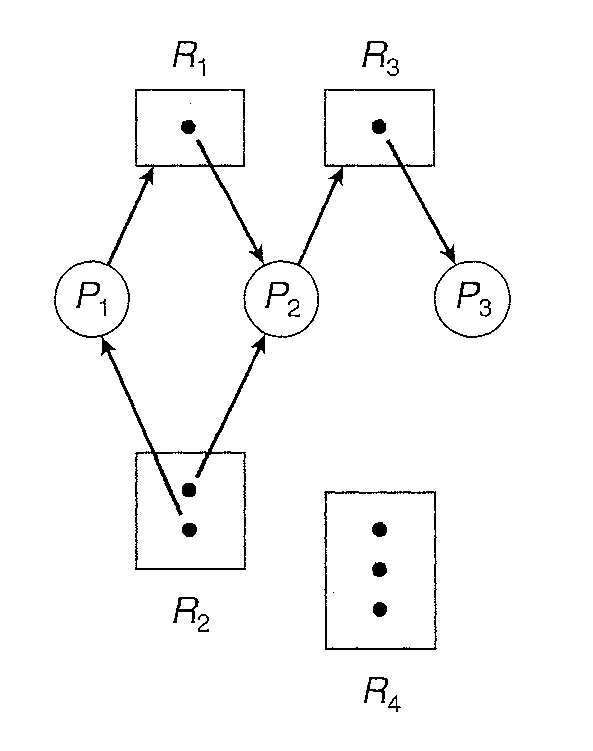

Deadlocks can be described more precisely in terms of a directed graph called a "system resource allocation graph". This graph consists of a set of vertices V and a set of edges E. The set of vertices V is partitioned into two different types of nodes: P = {P1, P2, ... , Pn} the set consisting of all the active processes in the system, and R = {R1, R2, ... , Rm} the set consisting of all resource types in the system.

A directed edge from process Pi to resource type Rj is denoted by Pi -> Rj; (request edge) it signifies that process Pi has requested an instance of resource type Rj and is currently waiting for that resource.

A directed edge from resource type Rj -> Pi (assignment edge) it signifies that an instance of resource type Rj has been allocated to process Pi.

Pictorially we represent each process Pi as a circle and each resource type Rj as a rectangle. Since resource type Rj may have more than one instance, we represent each such instance as a dot within the rectangle. Note that a request edge points to only the rectangle Rj, whereas an assignment edge must also designate one of the dots in the rectangle. When a request can be fulfilled, the request edge is instantaneously transformed to an assignment edge

If the graph contains no cycles, then no process in the system is deadlocked. If the graph does contain a cycle, then a deadlock may exist. Each process involved in the cycle is deadlocked. In this case, a cycle in the graph is both a necessary and a sufficient condition for the existence of deadlock.

If each resource type has several instances, then a cycle does not necessarily imply that a deadlock has occurred. In this case, a cycle in. the graph is a necessary but not a sufficient condition for the existence of deadlock.

Methods for Handling deadlocks

To use a protocol to prevent or avoid deadlocks, ensuring that the system will never enter a deadlocked state.

To allow the system to enter a deadlocked state, detect it, and recover.

Ignore the problem altogether and pretend that deadlocks never occur in the system. (One used by most operating systems, including UNIX and Windows)

To ensure that deadlocks never occur, the system can use a deadlock prevention or a deadlock-avoidance scheme. Deadlock prevention provides a set of methods for ensuring that at least one of the necessary conditions cannot hold. These methods prevent deadlocks by constraining how requests for resources can be made.

Deadlock Avoidance requires that the operating system be given in advance additional information concerning which resources a process will request and use during its lifetime.

With this additional knowledge, it can decide for each request whether or not the process should wait. To decide whether the current request can be satisfied or must be delayed, the system must consider the resources currently available, the resources currently allocated to each process, and the future requests and releases of each process.

If a system does not employ either a deadlock-prevention or a deadlock avoidance algorithm, then a deadlock situation may arise. In this environment, the system can provide an algorithm that examines the state of the system to determine whether a deadlock has occurred and an algorithm to recover from the deadlock.

Deadlock Prevention

For a deadlock to occur, each of the four necessary conditions must hold. By ensuring that at least one of these conditions cannot hold, we can prevent the occurrence of a deadlock.

How to ensure Mutual Exclusion does not occur ?

Mutual-exclusion condition must hold for non-sharable resources.

Sharable resources, in contrast, do not require mutually exclusive access and thus cannot be involved in a deadlock.

Read-only files are a good example of a sharable resource. A process never needs to wait for a sharable resource.

In general, however, we cannot prevent deadlocks by denying the mutual-exclusion condition, because some resources are intrinsically non-sharable.

How to ensure Hold and Wait Condition does not occur ?

To ensure that the hold-and-wait condition never occurs in the system, we must guarantee that, whenever a process requests a resource, it does not hold any other resources.

It can be implemented this provision by requiring that system calls requesting resources for a process precede all other system calls.

An alternative protocol allows a process to request resources only when it has none. A process may request some resources and use them. Before it can request any additional resources, however, it must release all the resources that it is currently allocated.

Example : Under protocol 1 if a task has to copy data from a DVD drive to a file on disk, sorts the file, and then prints the results to a printer. If all resources must be requested at the beginning of the process, then the process must initially request the DVD drive, disk file, and printer. It will hold the printer for its entire execution, even though it needs the printer only at the end

The second method allows the process to request initially only the DVD drive and disk file. It copies from the DVD drive to the disk and then releases both the DVD drive and the disk file. The process must then again request the disk file and the printer. After copying the disk file to the printer, it releases these two resources and terminates.

Both these protocols have two main disadvantages. First, resource utilization may be low, since resources may be allocated but unused for a long period.

Second, starvation is possible. A process that needs several popular resources may have to wait indefinitely, because at least one of the resources that it needs is always allocated to some other process.

Q. Ensure that No Preemption condition does not occur ?

If a process is holding some resources and requests another resource that cannot be immediately allocated to it (that is, the process must wait), then all resources the process is currently holding are preempted i.e. released.

The preempted resources are added to the list of resources for which the process is waiting. The process will be restarted only when it can regain its old resources, as well as the new ones that it is requesting.

Alternatively, if a process requests some resources, we first check whether they are available. If they are, we allocate them. If they are not, we check whether they are allocated to some other process that is waiting for additional resources.

If so, we preempt the desired resources from the waiting process and allocate them to the requesting process. If the resources are neither available nor held by a waiting process, the requesting process must wait.

While it is waiting, some of its resources may be preempted, but only if another process requests them.

A process can be restarted only when it is allocated the new resources it is requesting and recovers any resources that were preempted while it was waiting.

This protocol is often applied to resources whose state can be easily saved and restored later, such as CPU registers and memory space. It cannot generally be applied to such resources as printers and tape drives.

Q. Ensure that Circular Wait condition does not occur ?

One solution is to impose a total ordering of all resource types and to require that each process requests resources in an increasing order of enumeration.

Let R = { R1, R2, ... , Rm} be the set of resource types, Each process can request resources only in an increasing order of enumeration. That is, a process can initially request any number of instances of a resource type - say, Ri. After that, the process can request instances of resource type Rj if and only if F(Rj) > F(Ri).

Alternatively, we can require that a process requesting an instance of resource type Rj must have released any resources Ri; such that F(Ri) >= F(Rj). It must also be noted that if several instances of the same resource type are needed, a single request for all of them must be issued.

In the above protocol, a Circular Wait condition does not occur.