Contiguous Memory Allocation

The main memory must accommodate both the operating system and the various user processes. We therefore need to allocate main memory in the most efficient way possible - contiguous memory allocation.

The memory is usually divided into two partitions: one for the resident operating system and one for the user processes.

We usually want several user processes to reside in memory at the same time. We therefore need to consider how to allocate available memory to the processes that are in the input queue waiting to be brought into memory.

In. contiguous memory allocation, each process is contained in a single contiguous section of memory.

Memory Mapping and Protection

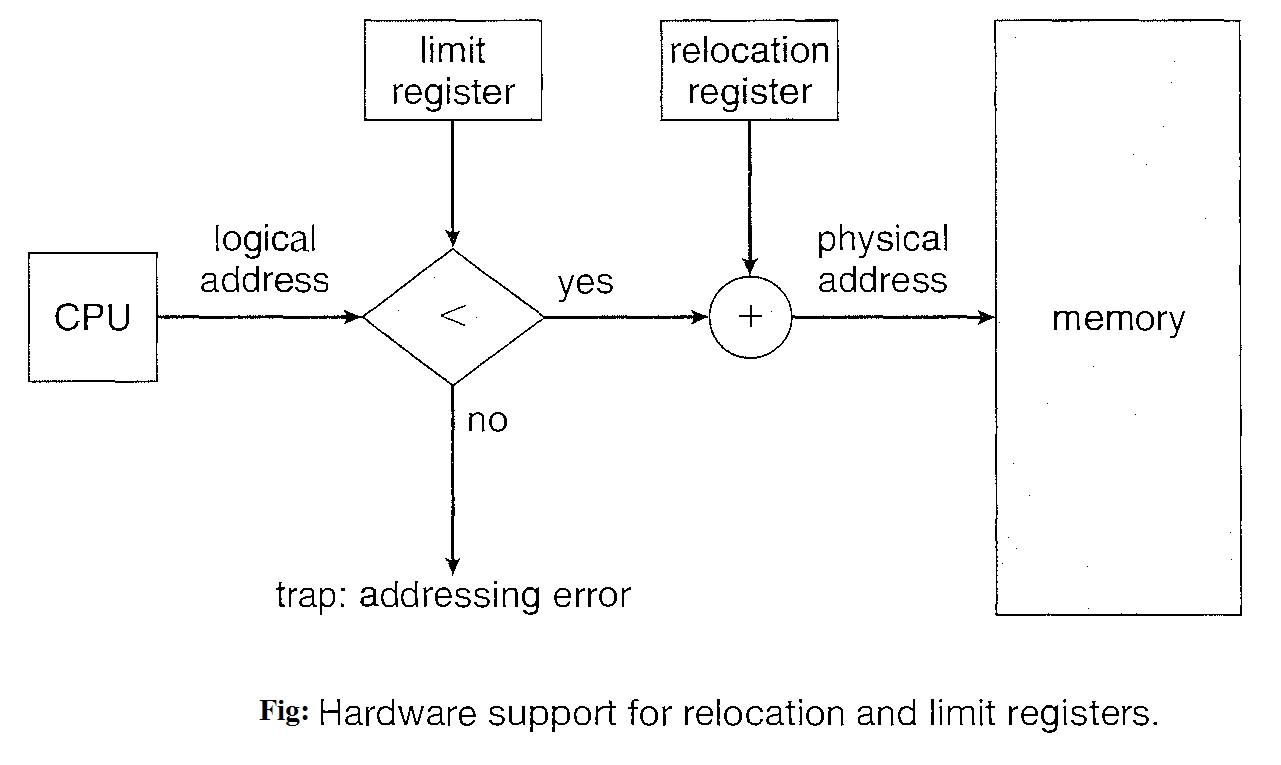

The relocation register contains the value of the smallest physical address; the limit register contains the range of logical addresses (for example, relocation= 100040 and limit= 74600).

With relocation and limit registers, each logical address must be less than the limit register; the MMU maps the logical address dynamically by adding the value in the relocation register. This mapped address is sent to memory.

When the CPU scheduler selects a process for execution, the dispatcher loads the relocation and limit registers with the correct values as part of the context switch.

Because every address generated by a CPU is checked against these registers, we can protect both the operating system and the other users' programs and data from being modified by this running process.

Memory Allocation

One of the simplest methods for allocating memory is to divide memory into several fixed-sized "partitions".

Each partition may contain exactly one process.

Thus, the degree of multi-programming is bound by the number of partitions. In this "multi-partition system" when a partition is free, a process is selected from the input queue and is loaded into the free partition.

When the process terminates, the partition becomes available for another process.

Variable partitioning allocation

The operating system keeps a table indicating which parts of memory are available and which are occupied.

Initially, all memory is available for user processes and is considered one large block of available memory - "a hole".

As processes enter the system, they are put into an input queue. The operating system takes into account the memory requirements of each process and the amount of available memory space in determining which processes are allocated memory.

When a process is allocated space, it is loaded into memory, and it can then compete for CPU time. When a process terminates, it releases its memory which the operating system may then fill with another process from the input queue.

At any given time, then, we have a list of available block sizes and an input queue. The operating system can order the input queue according to a scheduling algorithm.

Memory is allocated to processes until finally, the memory requirements of the next process cannot be satisfied -that is, no available block of memory (or hole) is large enough to hold that process.

The operating system can then wait until a large enough block is available, or it can skip down the input queue to see whether the smaller memory requirements of some other process can be met.

In general as mentioned, the memory blocks available comprise a set of holes of various sizes scattered throughout memory. When a process arrives and needs memory, the system searches the set for a hole that is large enough for this process.

If the hole is too large, it is split into two parts. One part is allocated to the arriving process; the other is returned to the set of holes. When a process terminates, it releases its block of memory, which is then placed back in the set of holes.

If the new hole is adjacent to other holes, these adjacent holes are merged to form one larger hole. At this point, the system may need to check whether there are processes waiting for memory and whether this newly freed and recombined memory could satisfy the demands of any of these waiting processes.

This procedure is a particular instance of the general "Dynamic storage allocation problem".

Dynamic storage allocation problem

Given a list of processes how to satisfy the request by allocating the optimum region to it.

First fit :

Allocate the first hole that is big enough.

enough. Searching can start either at the beginning of the set of holes or at the location where the previous first-fit search ended.

We can stop searching as soon as we find a free hole that is large enough.

Best fit :

Allocate the smallest hole that is big enough. We must search the entire list, unless the list is ordered by size. This strategy produces the smallest leftover hole.

Worst fit

Allocate the largest hole. Again, we must search the entire list, unless it is sorted by size.

This strategy produces the largest leftover hole, which may be more useful than the smaller leftover hole from a best-fit approach.

This strategy produces the largest leftover hole, which may be more useful than the smaller leftover hole from a best-fit approach.

Fragmentation

Both the first-fit and best-fit strategies for memory allocation suffer from external fragmentation As processes are loaded and removed from memory, the free memory space is broken into little pieces.

External fragmentation exists when there isenough total memory space to satisfy a request but the available spaces are not contiguous; storage is fragmented into a large number of small holes.

In the worst case, we could have a block of free (or wasted) memory between every two processes. If all these small pieces of memory were in one big free block instead, we might be able to run several more processes.

Statistical analysis of first fit reveals that, even with some optimization, given N allocated blocks, another 0.5 N blocks will be lost to fragmentation. This property is known as the 50 percent rule.

Internal Fragmentation

Memory fragmentation can be internal as well as external. Consider a multiple-partition allocation scheme with a hole of 18,464 bytes.

Suppose that the next process requests 18,462 bytes. If we allocate exactly the requested block, we are left with a hole of 2 bytes.

The overhead to keep track of this hole will be substantially larger than the hole itself.

The general approach to avoiding this problem is to break the physical memory into fixed-sized blocksand allocate memory in units based on block size.

With this approach, the memory allocated to a process may be slightly larger than the requested memory. The difference between these two numbers is internal fragmentation - unused memory that is internal to a partition.

Compaction

The goal is to shuffle the memory contents so as to place all free memory together in one large block.

The simplest compaction algorithm is to move all processes toward one end of memory; all holes move in the other direction, producing one large hole of available memory. This scheme can be expensive.

Another possible solution to the external-fragmentation problem is to permit the logical address space of the processes to be non-contiguous, thus allowing a process to be allocated physical memory wherever such memory is available. Two complementary techniques achieve this solution: paging and segmentation .

Memory-management scheme - Paging

It is a memory-management scheme that permits the physical address space a process to be non-contiguous. Paging avoids external fragmentation and the need for compaction.

The basic method for implementing paging involves :

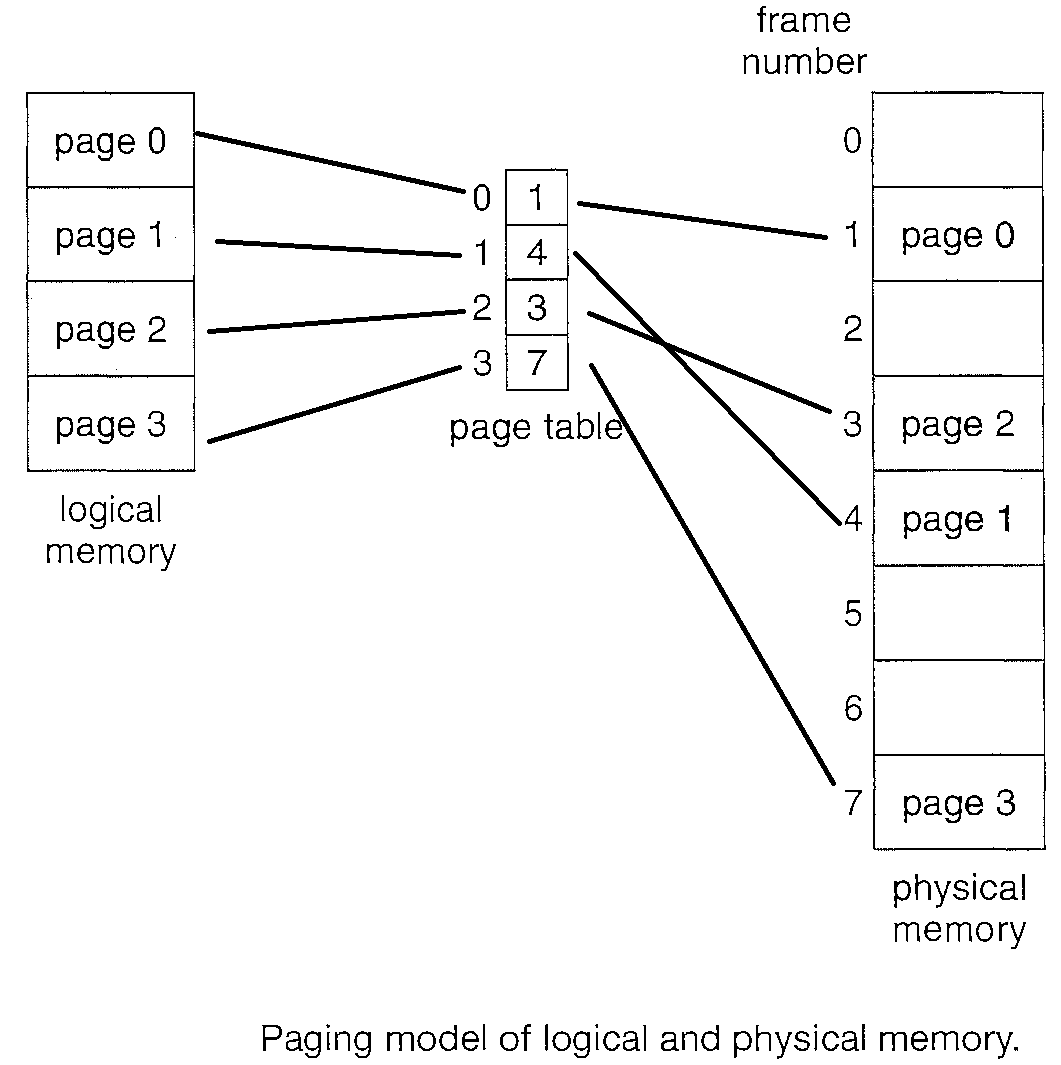

We initialize by breaking physical memory into fixed-sized blocks called frames and breaking logical memory into blocks of the same size called Pages.

When a process is to be executed, its pages are loaded into any available memory frames from their source.

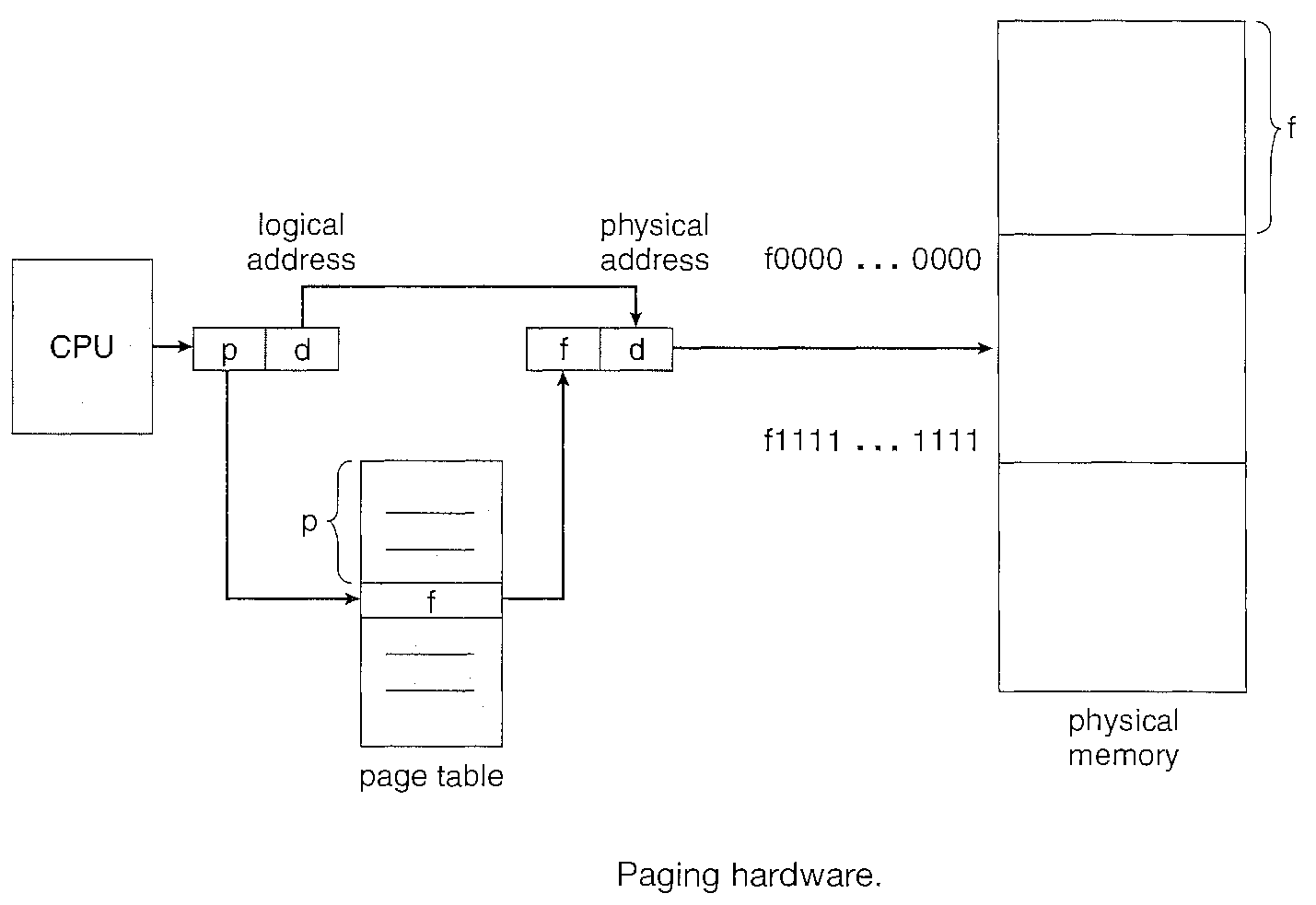

Every address generated the CPU is divided into two parts: Page table and a Page offset . The page number is used as an index into a Page table.

The page table contains the base address of each page in physical memory. This base address is combined with the page offset to define the physical memory address that is sent to the memory unit.

The page size (like the frame size) is defined by the hardware. The size of a page is typically a power of 2, varying between 512 bytes and 16 MB per page, depending on the computer architecture.

If the size of the logical address space is 2m, and a page size is 2n addressing units then the high-order m - n bits of a logical address designate the page number, and the "n" low-order bits designate the page offset.

Mapping pages to frames

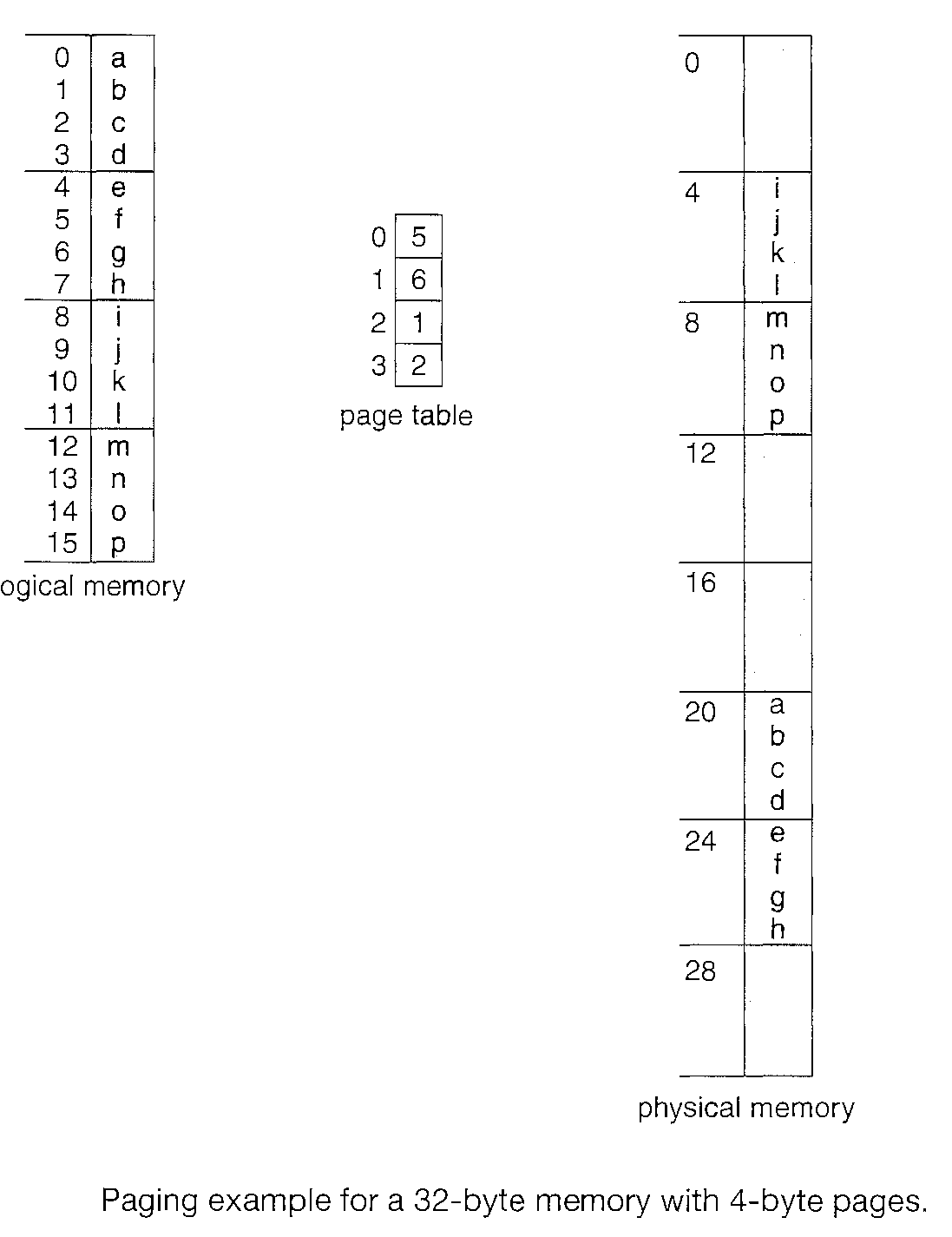

In below figure, in the logical address we have n = 2 (Page size is 2n) and m = 4 (logical address space is 2m). Using a page size of 4 bytes and a physical memory of 32 bytes (8 pages).

Logical address 0 is page 0, offset 0. Indexing into the page table, we find that page 0 is in frame 5.

Thus, logical address 0 maps to physical address 20 [= (5 x 4) + 0]. Logical address 3 (page 0, offset 3) maps to physical address 23 [ = (5 x 4) + 3].

Logical address 4 is page 1, offset 0; according to the page table, page 1 is mapped to frame 6. Thus, logical address 4 maps to physical address 24 [ = ( 6 x 4) + 0]. Logical address 13 maps to physical address 9 [ = (2 x 4) + 1].

Internal Fragmentation and Frame Table

When we use a paging scheme, we have no external fragmentation: any free frame can be allocated to a process that needs it. However, we may have some internal fragmentation.

For example, if page size is 2,048 bytes, a process of 72,766 bytes will need 35 pages plus 1,086 bytes. It will be allocated 36 frames, resulting in internal fragmentation of 2,048 - 1,086 = 962 bytes.

When a process arrives in the system to be executed, its size, expressed in pages, is examined. Each page of the process needs one frame. Thus, if the process requires 11 pages, at least 11 frames must be available in memory. If n frames are available, they are allocated to this arriving process. The first page of the process is loaded into one of the allocated frames, and the frame number is put in the page table for this process. The next page is loaded into another frame, its frame number is put into the page table, and so on

The user program views memory as one single space, containing only this one program. In fact, the user program is scattered throughout physical memory.

Since the operating system is managing physical memory, it must be aware of the allocation details of physical memory-which frames are allocated, which frames are available, how many total frames there are, and so on. This information is generally kept in a data structure called a frame table

Paging however increases the context-switch time.