Translation Look-aside Buffer

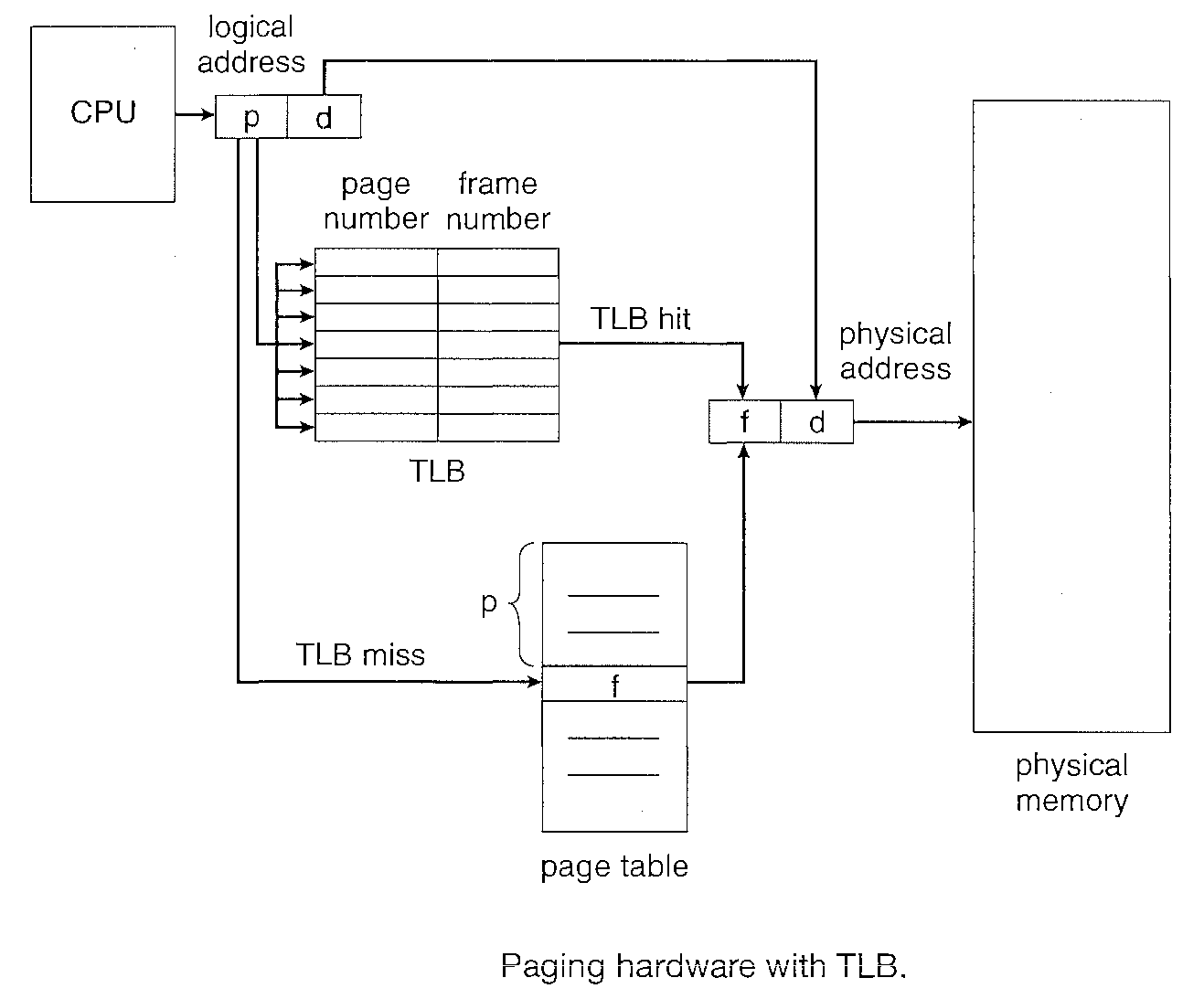

Process pages are mapped to frames in the memory however the mapping and address translation of pages to frames is costly and requires memory access. This delay is intolerable and hence the need to use a better solution which is named as Translation Look-aside Buffer (a special, small, fast lookup hardware cache).

As shown in the figure above, page to frame mapping is faster. However as this is a costly solution very few entries can be kept in the TLB compared to a page table. Typically, the number of entries in a TLB is small, often numbering between 64 and 1,024.

The TLB is used with page tables in the following way. The TLB contains only a few of the page-table entries. When a logical address is generated by the CPU, its page number is presented to the TLB.

If the page number is found, its frame number is immediately available and is used to access memory. The whole task may take less than 10 percent longer than it would if an unmapped memory reference were used.

If the page number is not in the TLB (known as a a memory miss) a reference to the page table must be made. When the frame number is obtained, we can use it to access memory. In addition, we add the page number and frame number to the TLB, so that they will be found quickly on the next reference. If the TLB is already full of entries, the operating system must select one for replacement.

The percentage of times that a particular page number is found in the TLB is called the Hit Ratio. An 80-percent hit ratio, for example, means that we find the desired page number in the TLB 80 percent of the time.

Segmentation - Memory Management Technique

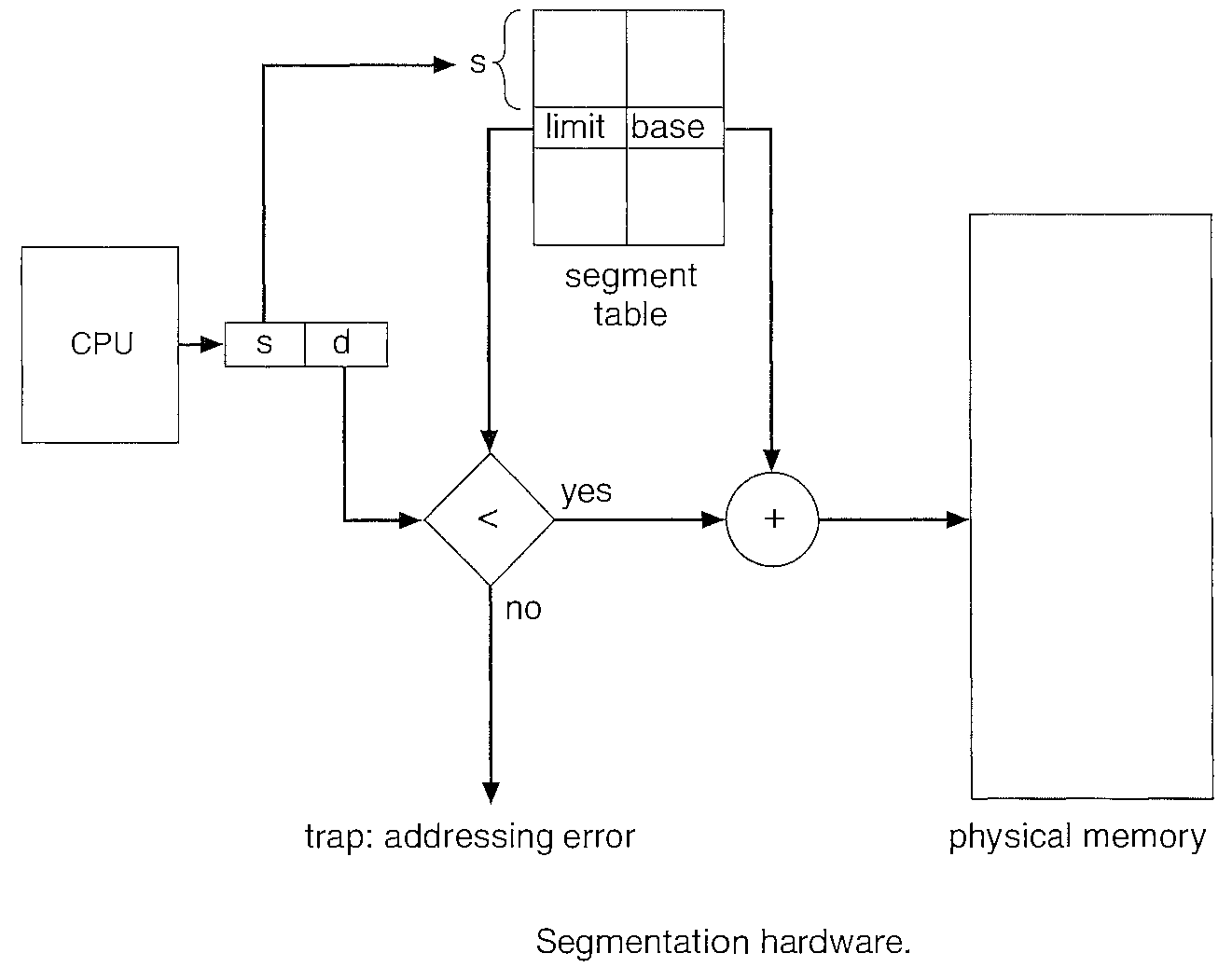

Segmentation procedure is done by use of a Segment Table. This maps two dimensional user-defined addresses into one-dimensional physical addresses.

Each entry in the segment table has a segment base and a segment limit. The segment base contains the starting physical address where the segment resides in memory, and the segment limit specifies the length of the segment.

The use of a segment table is illustrated in Figure above. A logical address consists of two parts: a segment number, "s", and an offset into that segment, "d". The segment number is used as an index to the segment table.

The offset "d" of the logical address must be between 0 and the segment limit. If it is not, we trap to the operating system (logical addressing attempt beyond end of segment). When an offset is legal, it is added to the segment base to produce the address in physical memory of the desired byte. The segment table is thus essentially an array of base-limit register pairs.

Demonstrate Segmentation

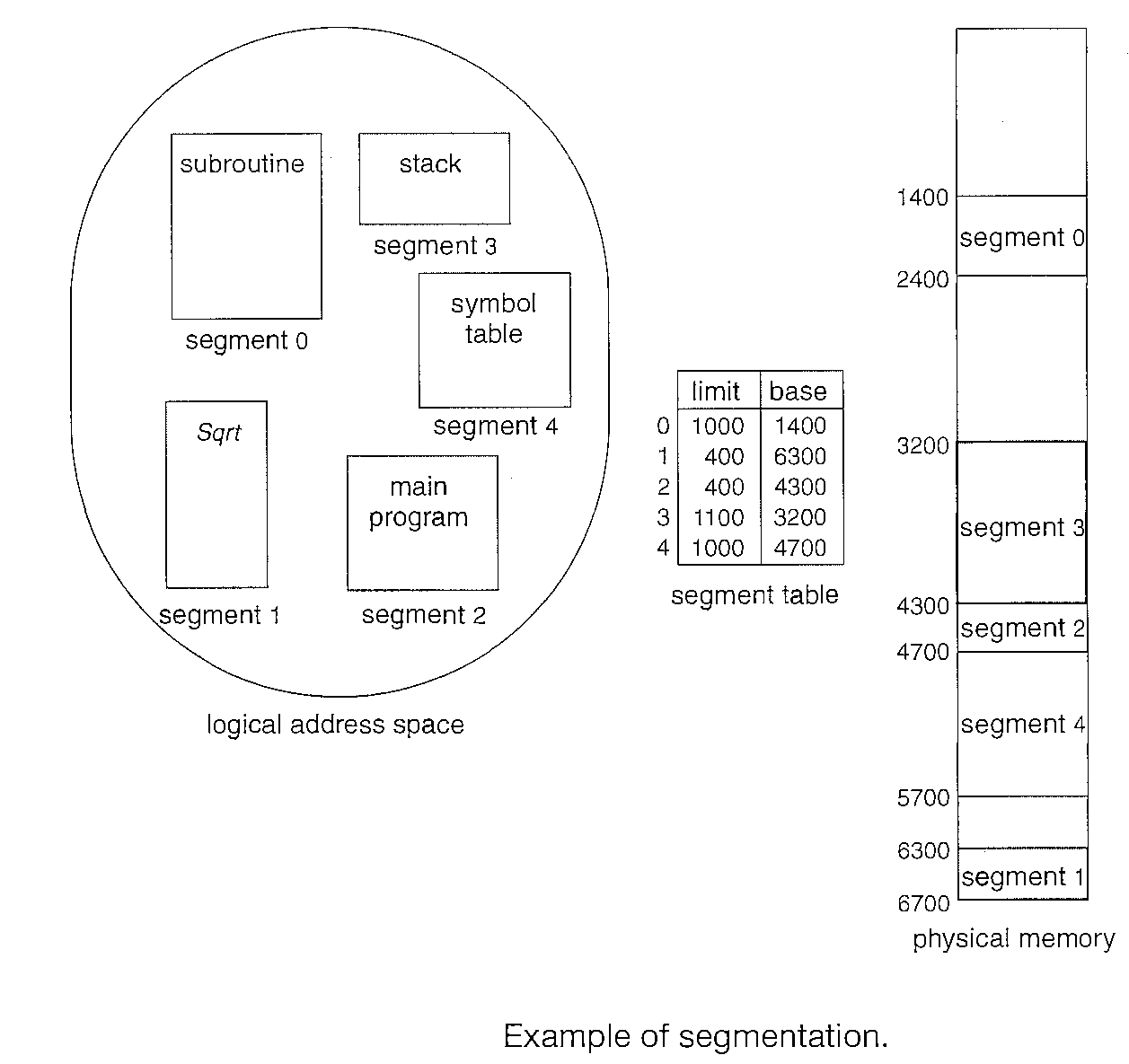

Consider the situation shown in Figure below. We have five segments numbered from 0 through 4.

The segments are stored in physical memory as shown. The segment table has a separate entry for each segment, giving the beginning address of the segment in physical memory (or base) and the length of that segment (or limit).

For example, segment 2 is 400 bytes long and begins at location 4300. Thus, a reference to byte 53 of segment 2 is mapped onto location 4300 + 53 = 4353.

A reference to segment 3, byte 852, is mapped to 3200 (the base of segment 3) + 852 = 4052.

A reference to byte 1222 of segment 0 would result in a trap to the operating system, as this segment is only 1000 bytes long.

Virtual Memory

Virtual memory is a technique that allows the execution of processes that are not completely in memory.

One major advantage of this scheme is that programs can be larger than physical memory.

Further, virtual memory abstracts main memory into an extremely large, uniform array of storage, separating logical memory as viewed by the user from physical memory.

The instructions being executed must be in physical memory. But it is also unfortunate, since it limits the size of a program to the size of physical memory. In fact, an examination of real programs shows us that, in many cases, the entire program is not needed.

Thus we need the ability to execute a program that is only partially in memory would confer many benefits.

If each user program could take less physical memory, more programs could be run at the same time, with a corresponding increase in CPU utilization and throughput but with no increase in response time or turnaround time.

Thus, running a program that is not entirely in memory would benefit both the system and the user.

Demand Paging

If an executable program is to be loaded from disk into memory. One option is to load the entire program in physical memory at program execution time.

However, a problem with this approach is that we may not initially need the entire program in memory.

An alternative strategy is to load pages only as they are needed. This technique is known as Demand paging and is commonly used in virtual memory systems.

With demand-paged virtual memory, pages are only loaded when they are demanded during program execution; pages that are never accessed are thus never loaded into physical memory.

We use a lazy swapper which never swaps a page into memory unless that page will be needed. Since we are now viewing a process as a sequence of pages, rather than as one large contiguous address space, use of the term swapper is technically incorrect.

A swapper manipulates entire processes, whereas a Pager is concerned with the individual pages of a process. We thus use pager, rather than swapper, in connection with demand paging.

Performance of Demand Paging - effective access time

Let "ma" be the memory-access time

Let p be the probability of a page fault (0 ≤ p ≤ 1).

We would expect "p" to be close to zero-that is, we would expect to have only a few page faults.

effective access time = (1 - p) x ma + p x page fault time.

With an average page-fault service time of 8 milliseconds and a memory access time of 200 nanoseconds, the effective access time in nanoseconds is

effective access time = (1 - p) x (200) + p * (8 milliseconds) = 200 + 7,999,800 * p.

The effective access time is directly proportional to the Page fault rate. If one access out of 1,000 causes a page fault, the effective access time is 8.2 microseconds.

Page replacement Algorithms

To reduce page faults it is important that the Pager swaps out pages which are not needed.

Page replacement takes the following approach. If no frame is free, we find one that is not currently being used and free it. We can free a frame by writing its contents to swap space and changing the page table (and all other tables) to indicate that the page is no longer in memory.

We can now use the freed frame to hold the page for which the process faulted.

Page replacement routines is as followed:

Find the location of the desired page on the disk.

Find a free frame: If there is a free frame, use it. If there is no free frame, use a page-replacement algorithm to select a Victim frame. Write the victim frame to the disk; change the page and frame tables accordingly.

Read the desired page into the newly freed frame; change the page and frame tables.

Restart the user process.

Every operating system probably has its own replacement scheme. How do we select a particular replacement algorithm? In general, we want the one with the lowest page-fault rate.