Simple Convolutional Network Example

In the last section, you saw the building blocks of a single convolutional layer in a ConvNet. Now let's go through a concrete example of a deep convolutional neural network. This will also give you some practice with the notation that we introduced toward the end of the last section.

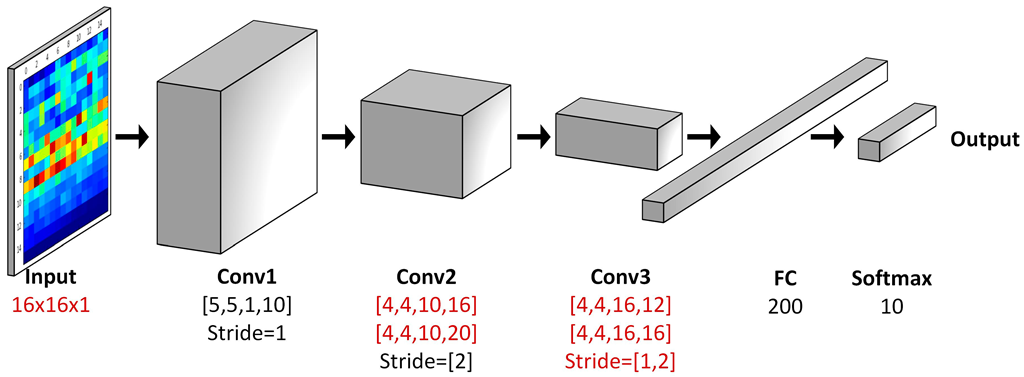

Let's say you have an image, and you want to do image classification, or image recognition: you take as input an image, x, and decide whether it is a cat or not, 0 or 1. So it's a classification problem.

Let's build an example of a ConvNet you could use for this task. For the sake of this example, I'm going to use a fairly small image. Let's say this image is 39 x 39 x 3 \( {n_H}^{[0]} = 39, {n_W}^{[0]} = 39, {n_C}^{[0]} = 3 \). This choice just makes some of the numbers work out a bit better.

And so \({n_H}\) and \({n_W}\) in layer 0, the height and width, are both equal to 39, and the number of channels in layer 0 is equal to 3. Let's say the first layer uses a set of 3 by 3 filters to detect features, so f = 3, or really \(f^{[1]} = 3\), because we're using a 3 by 3 filter.

And let's say we're using a stride of 1 \( s^{[1]} = 1 \) and no padding \( p^{[1]} = 0 \), so this is a valid convolution. And let's say you have 10 filters \( {n_C}^{[1]} = 10 \). The number of filters in layer 1 is equal to the number of channels in the output of layer 1.

Then the activations in this next layer of the neural network will be 37 x 37 x 10 \( {n_H}^{[1]} = 37, {n_W}^{[1]} = 37, {n_C}^{[1]} = 10 \), and this 10 comes from the fact that you use 10 filters.

And 37 comes from this formula: \( {n_H}^{[1]} = \left\lfloor \frac{{n_H}^{[0]} + 2p^{[1]} - f^{[1]}}{s^{[1]}} + 1 \right\rfloor \) and \( {n_W}^{[1]} = \left\lfloor \frac{{n_W}^{[0]} + 2p^{[1]} - f^{[1]}}{s^{[1]}} + 1 \right\rfloor \).

Here you have (39 + 0 - 3) / 1 + 1, which is equal to 37. So that's why the output is 37 by 37: it's a valid convolution, and that's the output size.
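As a quick check of that arithmetic, here is a minimal Python sketch of the output-size formula. The function name `conv_output_size` and its argument names are just illustrative choices, not something from the lecture.

```python
def conv_output_size(n_in: int, f: int, p: int = 0, s: int = 1) -> int:
    """Output height (or width) of a convolution: floor((n_in + 2p - f) / s + 1)."""
    # Integer floor division implements the floor in the formula.
    return (n_in + 2 * p - f) // s + 1


# Layer 1 of the example: 39 x 39 input, 3 x 3 filters, no padding, stride 1.
n_1 = conv_output_size(39, f=3, p=0, s=1)
print(n_1)  # 37
```

The same function applies to both height and width, since the image and the filters are square in this example.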

Let's say you now have another convolutional layer, and let's say this time you use 5 by 5 filters. So in our notation, \(f^{[2]} = 5\) for the next layer of the neural network, and let's say you use a stride of 2 \( s^{[2]} = 2 \) this time. And maybe you have no padding \(p^{[2]} = 0\) and, say, 20 filters \( {n_C}^{[2]} = 20 \).

So then the output of this will be another volume, this time it will be 17 x 17 x 20. Notice that, because you're now using a stride of 2, the dimension has shrunk much faster. 37 x 37 has gone down in size by slightly more than a factor of 2, to 17 x 17. And because you're using 20 filters, the number of channels now is 20.
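The 17 comes from the same output-size formula used for the first layer. As a quick check, with \( {n_H}^{[1]} = 37 \), \( f^{[2]} = 5 \), \( p^{[2]} = 0 \), and \( s^{[2]} = 2 \):

\[ {n_H}^{[2]} = \left\lfloor \frac{37 + 2 \cdot 0 - 5}{2} + 1 \right\rfloor = \left\lfloor 16 + 1 \right\rfloor = 17 \]

The width works out the same way, because the input and the filters are square.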

So this activation \(a^{[2]}\) would have that dimension \( {n_H}^{[2]} = 17, {n_W}^{[2]} = 17, {n_C}^{[2]} = 20 \). All right, let's apply one last convolutional layer. Let's say that you use a 5 by 5 filter again and, again, a stride of 2. If you do that, I'll skip the math, but you end up with 7 x 7, and let's say you use 40 filters, again with no padding.

You end up with 7 x 7 x 40. So now what you've done is taken your 39 x 39 x 3 input image and computed 7 x 7 x 40 features for this image. Finally, what's commonly done is to take this 7 x 7 x 40 volume; 7 times 7 times 40 is 1,960.
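To see all three layers together, including the third layer whose arithmetic was skipped, here is a small sketch that pushes the 39 x 39 x 3 shape through the example network. The `ConvLayer` dataclass and the `output_shape` helper are hypothetical names made up for this illustration; only the hyperparameter values come from the example.

```python
from dataclasses import dataclass


@dataclass
class ConvLayer:
    f: int          # filter size (f x f)
    s: int          # stride
    p: int          # padding
    n_filters: int  # number of filters, which becomes the output channel count


def output_shape(shape, layer):
    """Apply floor((n + 2p - f) / s + 1) to the height and width of `shape`."""
    n_h, n_w, _ = shape
    out_h = (n_h + 2 * layer.p - layer.f) // layer.s + 1
    out_w = (n_w + 2 * layer.p - layer.f) // layer.s + 1
    return (out_h, out_w, layer.n_filters)


# Hyperparameters of the three layers in the example.
layers = [
    ConvLayer(f=3, s=1, p=0, n_filters=10),
    ConvLayer(f=5, s=2, p=0, n_filters=20),
    ConvLayer(f=5, s=2, p=0, n_filters=40),
]

shape = (39, 39, 3)  # input image: height, width, channels
shapes = [shape]
for layer in layers:
    shape = output_shape(shape, layer)
    shapes.append(shape)

print(shapes)  # [(39, 39, 3), (37, 37, 10), (17, 17, 20), (7, 7, 40)]

n_features = shape[0] * shape[1] * shape[2]
print(n_features)  # 1960
```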

And so what we can do is take this volume and flatten it, or unroll it, into just 1,960 units. Just flatten it out into a vector, and then feed this to a logistic regression unit or a softmax unit, depending on whether you're trying to recognize cat versus no cat or any one of several different objects. That unit then gives the final predicted output for the neural network.

So just to be clear, this last step is just taking all of these numbers, all 1,960 numbers, and unrolling them into a very long vector. Then you have one long vector that you can feed into a softmax or logistic regression unit in order to make the prediction for the final output.
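The flatten-and-predict step might look roughly like the NumPy sketch below. Everything numeric here is an assumption for illustration: the feature volume is random data standing in for the real 7 x 7 x 40 activations, and the weights `w` and bias `b` are untrained placeholders, so the printed probability has no meaning beyond showing the shapes involved.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the 7 x 7 x 40 output of the last convolutional layer.
features = rng.standard_normal((7, 7, 40))

# Unroll the volume into one long vector of 7 * 7 * 40 = 1,960 numbers.
x = features.reshape(-1)
print(x.shape)  # (1960,)

# A single logistic regression unit: one weight per feature plus a bias.
# These parameters are random placeholders, not trained values.
w = rng.standard_normal(x.shape[0]) * 0.01
b = 0.0

z = w @ x + b
y_hat = 1.0 / (1.0 + np.exp(-z))  # sigmoid: predicted probability of "cat"
print(float(y_hat))
```

For a multi-class problem, the single unit would be replaced by a softmax over one score per class, but the flattening step stays the same.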

So this would be a pretty typical example of a ConvNet.

A lot of the work in designing a convolutional neural net is selecting hyperparameters like these: deciding what's the filter size, what's the stride, what's the padding, and how many filters are used.

But for now, maybe one thing to take away from this is that as you go deeper in a neural network, typically you start off with larger images, 39 by 39. And then the height and width will stay the same for a while and gradually trend down as you go deeper in the neural network. It's gone from 39 to 37 to 17 to 7.

Whereas the number of channels will generally increase. It's gone from 3 to 10 to 20 to 40, and you see this general trend in a lot of other convolutional neural networks as well.

So we'll get more guidelines about how to design these parameters in later sections. But you've now seen your first example of a convolutional neural network, or a ConvNet for short. So congratulations on that.

And it turns out that in a typical ConvNet, there are usually three types of layers. One is the convolutional layer, and often we'll denote that as a Conv layer. That's what we've been using in the network above. It turns out that there are two other common types of layers that you haven't seen yet, but we'll talk about them in the next couple of sections.

One is called a pooling layer, often I'll call this pool. And then the last is a fully connected layer called FC. And although it's possible to design a pretty good neural network using just convolutional layers, most neural network architectures will also have a few pooling layers and a few fully connected layers.

Fortunately pooling layers and fully connected layers are a bit simpler than convolutional layers to define.

So we'll do that quickly in the next two sections, and then you'll have a sense of all of the most common types of layers in a convolutional neural network. And you'll be able to put together even more powerful networks than the one we just saw.

So congrats again on seeing your first full convolutional neural network. We'll also discuss later this week how to train these networks; for training, we'll be using backpropagation, which you're already familiar with. But first let's talk briefly about pooling and fully connected layers. In the next section, let's quickly go over how to implement a pooling layer for your ConvNet.