Word Representation

In this section, you'll see how many of the ideas like RNNs, LSTMs, and GRUs can be applied to NLP, to Natural Language Processing, which is one of the areas of AI that is really being revolutionized by deep learning.

One of the key ideas you'll learn about is word embeddings, which are a way of representing words.

This lets your algorithms automatically understand analogies such as man is to woman as king is to queen, and many other examples.

And through these ideas of word embeddings, you'll be able to build NLP applications, even with relatively small labeled training sets.

Finally towards the end of the course, you'll see how to debias word embeddings. That's to reduce undesirable gender or ethnicity or other types of bias that learning algorithms can sometimes pick up.

So with that, let's get started with a discussion on word representation. So far, we've been representing words using a vocabulary of words, and a vocabulary from the previous section might be say, 10,000 words.

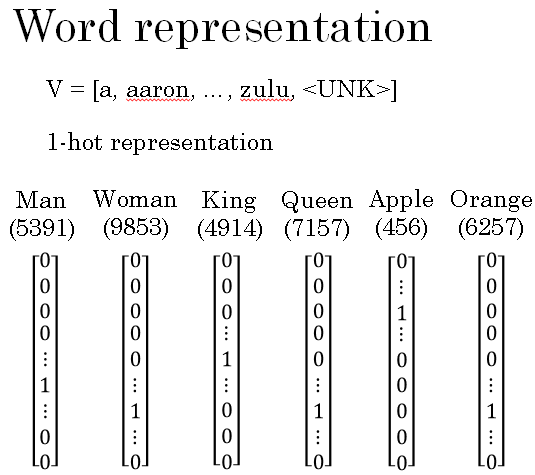

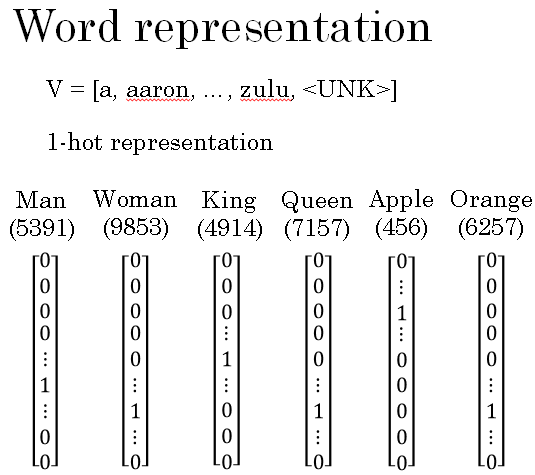

And we've been representing words using a one-hot vector. So for example, if man is word number 5391 in this dictionary, then you represent it with a vector with a one in position 5391 and zeros everywhere else.

And I'm also going to use O5391 to represent this vector, where O here stands for one-hot.

And then, if woman is word number 9853, then you represent it with O9853, which just has a one in position 9853 and zeros elsewhere. And then the other words, king, queen, apple, orange, will be similarly represented with one-hot vectors.
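The one-hot representation described above can be sketched in a few lines of NumPy. This is only an illustration: the helper name `one_hot` is made up here, and it assumes the word number can be used directly as a 0-based array index into a 10,000-word vocabulary.

```python
import numpy as np

VOCAB_SIZE = 10_000  # vocabulary size mentioned in the text


def one_hot(index: int, size: int = VOCAB_SIZE) -> np.ndarray:
    """Return a vector of zeros with a single 1 at `index` (hypothetical helper)."""
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec


o_5391 = one_hot(5391)  # "man" in the text's dictionary
o_9853 = one_hot(9853)  # "woman" in the text's dictionary

print(o_5391.shape, int(o_5391.sum()), int(np.argmax(o_5391)))
```

Each vector has as many entries as the vocabulary has words, and exactly one of those entries is non-zero.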

One of the weaknesses of this representation is that it treats each word as a thing unto itself, and it doesn't allow an algorithm to easily generalize across words.

For example, let's say you have a language model that has learned to complete the sentence "I want a glass of orange ___________".

Well, what do you think the next word will be? Very likely, it'll be juice.

But even if the learning algorithm has learned that "I want a glass of orange juice" is a likely sentence, that doesn't help it much when it sees "I want a glass of apple ____________".

As far as it knows, the relationship between apple and orange is not any closer than the relationship between orange and any of the other words: man, woman, king, or queen.

And so, it's not easy for the learning algorithm to generalize from knowing that orange juice is a popular thing, to recognizing that apple juice might also be a popular thing or a popular phrase.

And this is because the inner product between any two different one-hot vectors is zero.

If you take any two vectors, say queen and king, and take their inner product, the result is zero. If you take apple and orange and take their inner product, the result is zero.

And the Euclidean distance between any pair of these vectors is also the same.

So it just doesn't know that somehow apple and orange are much more similar than king and orange or queen and orange.
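A quick numerical check of that claim might look like the sketch below. Only the indices for man (5391) and woman (9853) come from the text; the other four indices are made up for illustration, and `one_hot` is a hypothetical helper.

```python
import itertools

import numpy as np

VOCAB_SIZE = 10_000


def one_hot(index: int, size: int = VOCAB_SIZE) -> np.ndarray:
    """Return a vector of zeros with a single 1 at `index` (hypothetical helper)."""
    vec = np.zeros(size)
    vec[index] = 1.0
    return vec


# 5391 and 9853 are from the text; the remaining indices are invented.
word_index = {
    "man": 5391, "woman": 9853, "king": 4914,
    "queen": 7157, "apple": 456, "orange": 6257,
}
vectors = {word: one_hot(i) for word, i in word_index.items()}

inner_products = []
distances = []
for a, b in itertools.combinations(vectors, 2):
    inner_products.append(float(vectors[a] @ vectors[b]))
    distances.append(float(np.linalg.norm(vectors[a] - vectors[b])))

print("distinct inner products:", set(inner_products))
print("distinct distances:", {round(d, 6) for d in distances})
```

Every pair of different words gives an inner product of zero and a Euclidean distance of the square root of two, so apple is exactly as far from orange as it is from king.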

So, wouldn't it be nice if, instead of a one-hot representation, we could learn a featurized representation for each of these words? For man, woman, king, queen, apple, orange, or really for every word in the dictionary, we could learn a set of features and values.

So for example, for each of these words, we want to know what gender is associated with it.

So, if gender goes from minus one for male to plus one for female, then the gender associated with man might be minus one, for woman might be plus one.

And then, learning these things, maybe for king you get minus 0.95, for queen plus 0.97, and apple and orange are sort of genderless.

Another feature might be, well, how royal are these things? The terms man and woman are not really royal, so they might have feature values close to zero. Whereas king and queen are highly royal. And apple and orange are not really royal.

How about age? Well, man and woman don't connote much about age. Maybe man and woman imply adults, but neither necessarily young nor old. So maybe values close to zero. Whereas kings and queens are almost always adults.

And apple and orange might be more neutral with respect to age. And then, another feature here: is this a food? Well, man is not a food, woman is not a food, neither are kings and queens, but apples and oranges are foods. And there can be many other features as well, ranging from: what is the size of this? What is the cost?

Is this something that is alive? Is this an action, or is this a noun, or is this a verb, or is it something else? And so on. So you can imagine coming up with many features.

And I'm going to use the notation e5391 to denote a representation like this for the word man, say a 300 dimensional vector of feature values. And similarly, I would use e9853 to denote the 300 dimensional vector we could use to represent the word woman.

And similarly, for the other examples here. Now, if you use this representation to represent the words orange and apple, then notice that the representations for orange and apple are now quite similar.

Some of the features will differ because of the color of an orange, the color of an apple, the taste, and so on. But by and large, a lot of the features of apple and orange are actually the same, or take on very similar values.

And so, this increases the odds that a learning algorithm that has figured out that orange juice is a thing will also quickly figure out that apple juice is a thing. So this allows it to generalize better across different words.
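To make the contrast with one-hot vectors concrete, here is a small sketch using just four features (gender, royal, age, food). The gender values for man, woman, king, and queen follow the text; every other number is an illustrative guess, not a learned value.

```python
import numpy as np

# Feature order: gender, royal, age, food.
# Gender values for man/woman/king/queen follow the text;
# all other numbers are made up for illustration.
features = {
    "man":    np.array([-1.00,  0.01,  0.03, 0.04]),
    "woman":  np.array([ 1.00,  0.02,  0.02, 0.01]),
    "king":   np.array([-0.95,  0.93,  0.70, 0.02]),
    "queen":  np.array([ 0.97,  0.95,  0.69, 0.01]),
    "apple":  np.array([ 0.00, -0.01,  0.03, 0.95]),
    "orange": np.array([ 0.01,  0.00, -0.02, 0.97]),
}


def distance(a: str, b: str) -> float:
    """Euclidean distance between the feature vectors of two words."""
    return float(np.linalg.norm(features[a] - features[b]))


def inner(a: str, b: str) -> float:
    """Inner product between the feature vectors of two words."""
    return float(features[a] @ features[b])


for other in ("apple", "king", "queen"):
    print(f"orange vs {other}: distance={distance('orange', other):.3f}, "
          f"inner product={inner('orange', other):.3f}")
```

Unlike the one-hot case, the distance from orange to apple is now much smaller than the distance from orange to king or queen, which is the kind of signal that could help an algorithm generalize from orange juice to apple juice.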

So over the next few sections, we'll find a way to learn word embeddings. That just means learning high dimensional feature vectors like these, which give a better representation than one-hot vectors for representing different words.

And the features we'll end up learning won't have an easy interpretation, like component one is gender, component two is royal, component three is age, and so on.

Exactly what they're representing will be a bit harder to figure out. But nonetheless, the featurized representations we will learn will allow an algorithm to quickly figure out that apple and orange are more similar than, say, king and orange or queen and orange.

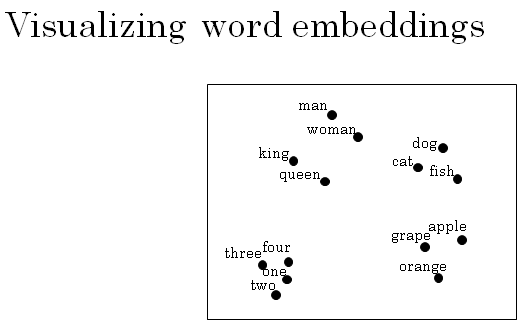

If we're able to learn a 300 dimensional feature vector, or 300 dimensional embedding, for each word, one of the popular things to do is to take this 300 dimensional data and embed it in, say, a two dimensional space so that you can visualize it.

And so, one common algorithm for doing this is the t-SNE algorithm due to Laurens van der Maaten and Geoff Hinton. And if you look at one of these embeddings, one of these representations, you find that words like man and woman tend to get grouped together, king and queen tend to get grouped together, and these are all people, which tend to get grouped together.

Animals tend to get grouped together. Fruits will tend to be close to each other. Numbers like one, two, three, four will be close to each other. And then, maybe the animate objects as a whole will also tend to be grouped together.

You see plots like these sometimes on the internet to visualize some of these 300 or higher dimensional embeddings. And maybe this gives you a sense that word embedding algorithms like this can learn similar features for concepts that feel like they should be more related: concepts that seem to you and me like they should be more similar end up getting mapped to more similar feature vectors.

And these sort of featurized representations, in maybe a 300 dimensional space, are called embeddings. And the reason we call them embeddings is, you can think of a 300 dimensional space. And again, I can't draw that out here in two dimensions, so picture a 3D one instead.

And what you do is you take every word, like orange, and give it a 300 dimensional feature vector, so that the word orange gets embedded to a point in this 300 dimensional space. And the word apple gets embedded to a different point in this 300 dimensional space.

And of course, to visualize it, algorithms like t-SNE map this to a much lower dimensional space, so you can actually plot the 2D data and look at it. But that's where the term embedding comes from. Word embeddings have been one of the most important ideas in NLP, in Natural Language Processing.
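The idea of mapping 300 dimensional points down to 2D for plotting can be sketched as follows. Two caveats: this uses PCA (a linear projection computed with NumPy's SVD) as a simpler stand-in, not t-SNE, which is non-linear and would generally produce a different layout; and the 300 dimensional vectors here are synthetic (random cluster centres plus small noise), not learned embeddings.

```python
import numpy as np

rng = np.random.default_rng(0)
DIM = 300

# Synthetic stand-ins for learned embeddings: one random centre for
# "people" words and one for "fruit" words, plus small per-word noise.
people_center = rng.normal(size=DIM)
fruit_center = rng.normal(size=DIM)
words = ["man", "woman", "king", "queen", "apple", "orange"]
centers = [people_center] * 4 + [fruit_center] * 2
E = np.stack([c + 0.1 * rng.normal(size=DIM) for c in centers])  # (6, 300)

# PCA via SVD: centre the data, then project onto the top two
# principal directions to get one 2D point per word.
centered = E - E.mean(axis=0)
_, _, vt = np.linalg.svd(centered, full_matrices=False)
points_2d = centered @ vt[:2].T  # (6, 2)

for word, (x, y) in zip(words, points_2d):
    print(f"{word:>6}: ({x:+.2f}, {y:+.2f})")
```

In the printed coordinates, the fruit words land near each other and away from the people words, which is the kind of grouping the 2D plots are meant to reveal.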

In this section, you saw why you might want to learn or use word embeddings. In the next section, let's take a deeper look at how you'll be able to use these representations to build NLP algorithms.

And for the sake of illustration, let's say there are 300 different features. What that does is allow you to take the list of feature values for a word (only four are written out above, but this could be a list of 300 numbers) and turn it into a 300 dimensional vector for representing the word man.