Beam Search

In this section, you learn about the beam search algorithm. In the last section, you remember how for machine translation given an input French sentence, you don't want to output a random English translation, you want to output the best and the most likely English translation.

The same is also true for speech recognition where given an input audio clip, you don't want to output a random text transcript of that audio, you want to output the best, maybe the most likely, text transcript.

Beam search is the most widely used algorithm to do this. And in this section, you see how to get beam search to work for yourself. Let's just try Beam Search using our running example of the French sentence, "Jane, visite l'Afrique en Septembre". Hopefully being translated into, "Jane, visits Africa in September".

The first thing Beam search has to do is try to pick the first words of the English translation, that's going to operate. So here I've listed, say, 10,000 words into vocabulary.

And to simplify the problem a bit, I'm going to ignore capitalization. So I'm just listing all the words in lower case.

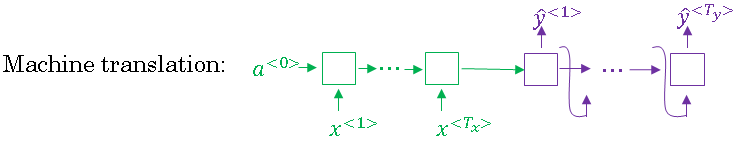

So, in the first step of Beam Search, I use the below network fragment with the encoder in green and decoder in purple, to try to evaluate what is the probability of that for a word. So, what's the probability of the first output y, given the input sentence x gives the French input.

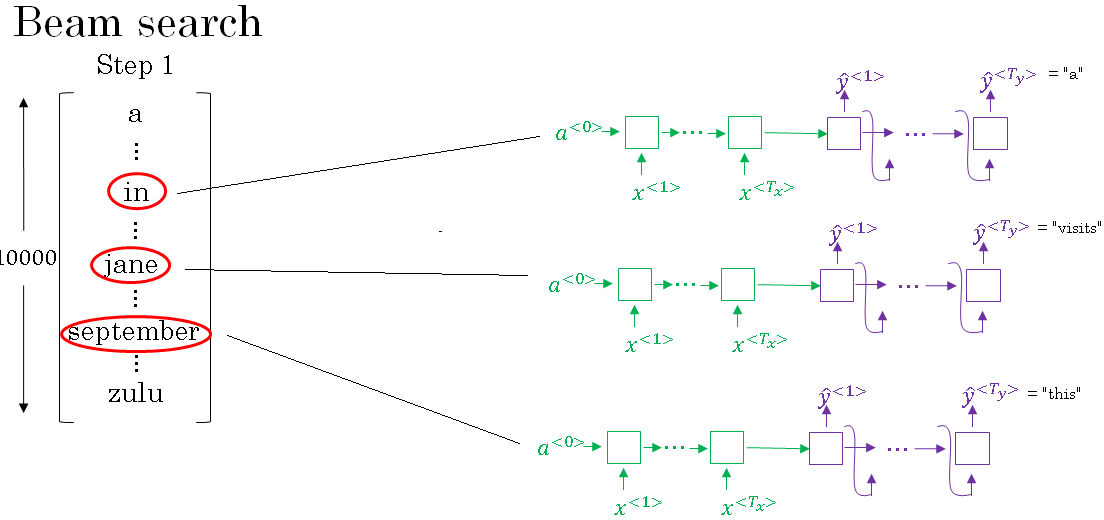

So, whereas greedy search will pick only the one most likely words and move on, Beam Search instead can consider multiple alternatives. So, the Beam Search algorithm has a parameter called B, which is called the beam width and for this example I'm going to set the beam width to be with the three.

And what this means is Beam search will cause that not just one possibility but consider three at the time. So in particular, let's say evaluating this probability over different choices the first words, it finds that the choices in, Jane and September are the most likely three possibilities for the first words in the English outputs.

Then Beam search will stowaway in computer memory that it wants to try all of three of these words, and if the beam width parameter were said differently, the beam width parameter was 10, then we keep track of not just three but of the ten, most likely possible choices for the first word.

So, to be clear in order to perform this first step of Beam search, what you need to do is run the input French sentence through this encoder network and then this first step will then decode the network, this is a softmax output overall 10,000 possibilities. Then you would take those 10,000 possible outputs and keep in memory which were the top three. Let's go into the second step of Beam search.

Having picked in, Jane and September as the three most likely choice of the first word, what Beam search will do now, is for each of these three choices consider what should be the second word.

So, to evaluate the probability of second word, it will use this new network fragments where encoder is in green and for the decoder portion when trying to decide what comes after the three selected first words.

So for this second step of beam search because we're continuing to use a beam width of three and because there are 10,000 words in the vocabulary you'd end up considering three times 10000 or thirty thousand possibilities.

And the outcome of this process hopefully will be that adding one word at a time that Beam search will decide that. Jane visits Africa in September will be terminated by the end of sentence symbol using that system is quite common. They'll find that this is a likely output English sentence and you'll see more details of this yourself.

In the programming exercise as well where you get to play with beam search yourself. So with a beam of three being searched considers three possibilities at a time.

Notice that if the beam width was said to be equal to one, say cause there's only one, then this essentially becomes the greedy search algorithm which we had discussed in the last section but by considering multiple possibilities say three or ten or some other number at the same time beam search will usually find a much better output sentence than greedy search.