RELATIONS AND FUNCTIONS

Empty relation is the relation R in X given by R = ∅ ⊂ X × X.

Universal relation is the relation R in X given by R = X × X.

Reflexive relation R in X is a relation with (a, a) ∈ R ∀ a ∈ X.

Symmetric relation R in X is a relation satisfying (a, b) ∈ R implies (b, a) ∈ R.

Transitive relation R in X is a relation satisfying (a, b) ∈ R and (b, c) ∈ R implies that (a, c) ∈ R.

Equivalence relation R in X is a relation which is reflexive, symmetric and transitive.

Equivalence class [a] containing a ∈ X for an equivalence relation R in X is the subset of X containing all elements b related to a.
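The definitions above can be checked mechanically when X is finite. The following is a minimal sketch, assuming a relation is stored as a Python set of ordered pairs; the function names and the example relation (congruence modulo 3) are illustrative choices, not part of the original notes.

```python
from itertools import product

def is_reflexive(X, R):
    """(a, a) is in R for every a in X."""
    return all((a, a) in R for a in X)

def is_symmetric(R):
    """(a, b) in R implies (b, a) in R."""
    return all((b, a) in R for (a, b) in R)

def is_transitive(R):
    """(a, b) in R and (b, c) in R implies (a, c) in R."""
    return all((a, d) in R for (a, b) in R for (c, d) in R if b == c)

def is_equivalence(X, R):
    return is_reflexive(X, R) and is_symmetric(R) and is_transitive(R)

def equivalence_class(X, R, a):
    """The subset of X containing all elements b related to a."""
    return {b for b in X if (a, b) in R}

# Illustrative example: a R b when a - b is divisible by 3, on X = {0, ..., 5}.
X = set(range(6))
R = {(a, b) for a, b in product(X, repeat=2) if (a - b) % 3 == 0}

print(is_equivalence(X, R))                   # True
print(sorted(equivalence_class(X, R, 1)))     # [1, 4]
print(is_equivalence(X, set()))               # False: the empty relation is not reflexive on a non-empty X
```

Note that the empty relation is symmetric and transitive (vacuously) but fails reflexivity whenever X is non-empty, while the universal relation X × X passes all three tests.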

A function f : X → Y is one-one (or injective) if

f (x1) = f (x2) ⇒ x1 = x2 ∀ x1, x2 ∈ X.

A function f : X → Y is onto (or surjective) if given any y ∈ Y, ∃ x ∈ X such that f (x) = y.

A function f : X → Y is one-one and onto (or bijective), if f is both one-one and onto.

The composition of functions f : A → B and g : B → C is the function gof : A → C given by gof (x) = g(f (x)) ∀ x ∈ A.

A function f : X → Y is invertible if ∃ g : Y → X such that gof = $I_X$ and fog = $I_Y$, where $I_X$ and $I_Y$ are the identity functions on X and Y.

A function f : X → Y is invertible if and only if f is one-one and onto.

Given a finite set X, a function f : X → X is one-one (respectively onto) if and only if f is onto (respectively one-one). This is a characteristic property of finite sets; it does not hold for infinite sets.
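For finite sets, these properties of functions can be tested directly. The sketch below assumes a function f : X → Y is stored as a Python dict whose keys are the elements of X; the helper names are hypothetical. For contrast with the finite case, the map n ↦ 2n on the natural numbers is one-one but not onto, which a finite check like this cannot exhibit.

```python
def is_one_one(f):
    """f(x1) = f(x2) implies x1 = x2, i.e. no value is taken twice."""
    values = list(f.values())
    return len(values) == len(set(values))

def is_onto(f, Y):
    """Every y in Y equals f(x) for some x."""
    return set(Y) <= set(f.values())

def compose(g, f):
    """gof as a dict: x -> g(f(x))."""
    return {x: g[f[x]] for x in f}

def inverse(f, Y):
    """Return g : Y -> X with gof = I_X and fog = I_Y; needs f one-one and onto."""
    if not (is_one_one(f) and is_onto(f, Y)):
        raise ValueError("f is not one-one and onto, so it is not invertible")
    return {y: x for x, y in f.items()}

X = {1, 2, 3}
identity_X = {x: x for x in X}

f = {1: 2, 2: 3, 3: 1}      # one-one and onto, hence invertible
g = inverse(f, X)
print(compose(g, f) == identity_X, compose(f, g) == identity_X)

h = {1: 1, 2: 1, 3: 2}      # fails both tests at once, as expected on a finite set
print(is_one_one(h), is_onto(h, X))
```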

A binary operation ∗ on a set A is a function ∗ from A × A to A.

An element e ∈ X is the identity element for binary operation ∗ : X × X → X, if a ∗ e = a = e ∗ a ∀ a ∈ X.

An element a ∈ X is invertible for binary operation ∗ : X × X → X, if there exists b ∈ X such that a ∗ b = e = b ∗ a, where e is the identity for the binary operation ∗. The element b is called the inverse of a and is denoted by $a^{-1}$.

An operation ∗ on X is commutative if a ∗ b = b ∗ a ∀ a, b in X.

An operation ∗ on X is associative if (a ∗ b) ∗ c = a ∗ (b ∗ c) ∀ a, b, c in X.
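On a finite set, the properties of a binary operation can be verified by exhaustive search. The following sketch assumes the operation is given as a two-argument Python function; the helper names and the two example operations (addition and subtraction modulo 5) are illustrative assumptions.

```python
from itertools import product

def is_commutative(X, op):
    return all(op(a, b) == op(b, a) for a, b in product(X, repeat=2))

def is_associative(X, op):
    return all(op(op(a, b), c) == op(a, op(b, c))
               for a, b, c in product(X, repeat=3))

def identity_element(X, op):
    """Return e with a * e = a = e * a for all a, or None if there is none."""
    for e in X:
        if all(op(a, e) == a == op(e, a) for a in X):
            return e
    return None

def inverse_element(X, op, a):
    """Return b with a * b = e = b * a, or None if a is not invertible."""
    e = identity_element(X, op)
    if e is None:
        return None
    for b in X:
        if op(a, b) == e == op(b, a):
            return b
    return None

X = range(5)

def add_mod5(a, b):
    return (a + b) % 5

def sub_mod5(a, b):
    return (a - b) % 5

print(is_commutative(X, add_mod5), is_associative(X, add_mod5))
print(identity_element(X, add_mod5), inverse_element(X, add_mod5, 2))
print(is_commutative(X, sub_mod5), is_associative(X, sub_mod5))
print(identity_element(X, sub_mod5))
```

Addition modulo 5 is commutative and associative with identity 0, and the inverse of 2 is 3. Subtraction modulo 5 has none of these properties.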

INVERSE TRIGONOMETRIC FUNCTIONS

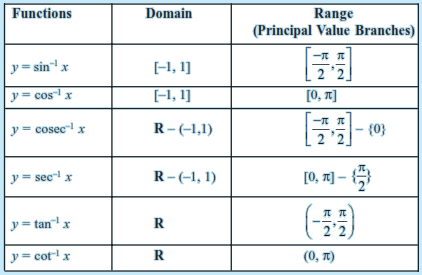

The domains and ranges (principal value branches) of inverse trigonometric functions are given in the following table:

$\sin^{-1} x$ should not be confused with $(\sin x)^{-1}$. In fact, $(\sin x)^{-1} = \frac{1}{\sin x}$, and similarly for the other trigonometric functions.

The value of an inverse trigonometric function that lies in its principal value branch is called the principal value of that inverse trigonometric function.

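$y = \sin^{-1} x \Rightarrow x = \sin y$

$x = \sin y \Rightarrow y = \sin^{-1} x$, provided y lies in the principal value branch $[-\frac{\pi}{2}, \frac{\pi}{2}]$

$\sin(\sin^{-1} x) = x$, for $x \in [-1, 1]$

$\sin^{-1}(\sin x) = x$, for $x \in [-\frac{\pi}{2}, \frac{\pi}{2}]$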

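$\sin^{-1} \frac{1}{x} = \operatorname{cosec}^{-1} x$, for $|x| \ge 1$

$\cos^{-1}(-x) = \pi - \cos^{-1} x$, for $x \in [-1, 1]$

$\cos^{-1} \frac{1}{x} = \sec^{-1} x$, for $|x| \ge 1$

$\cot^{-1}(-x) = \pi - \cot^{-1} x$, for $x \in \mathbb{R}$

$\tan^{-1} \frac{1}{x} = \cot^{-1} x$, for $x > 0$

$\sec^{-1}(-x) = \pi - \sec^{-1} x$, for $|x| \ge 1$

$\sin^{-1}(-x) = -\sin^{-1} x$, for $x \in [-1, 1]$

$\tan^{-1}(-x) = -\tan^{-1} x$, for $x \in \mathbb{R}$

$\tan^{-1} x + \cot^{-1} x = \frac{\pi}{2}$, for $x \in \mathbb{R}$

$\operatorname{cosec}^{-1}(-x) = -\operatorname{cosec}^{-1} x$, for $|x| \ge 1$

$\sin^{-1} x + \cos^{-1} x = \frac{\pi}{2}$, for $x \in [-1, 1]$

$\operatorname{cosec}^{-1} x + \sec^{-1} x = \frac{\pi}{2}$, for $|x| \ge 1$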

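$\tan^{-1} x + \tan^{-1} y = \tan^{-1} \frac{x+y}{1-xy}$, for $xy < 1$

$2\tan^{-1} x = \tan^{-1} \frac{2x}{1-x^2}$, for $|x| < 1$

$\tan^{-1} x - \tan^{-1} y = \tan^{-1} \frac{x-y}{1+xy}$, for $xy > -1$

$2\tan^{-1} x = \sin^{-1} \frac{2x}{1+x^2}$ for $|x| \le 1$, and $2\tan^{-1} x = \cos^{-1} \frac{1-x^2}{1+x^2}$ for $x \ge 0$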
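A quick numerical spot-check can help confirm these identities and show why the domain restrictions matter. This is a minimal sketch using Python's standard `math` module; the helper `acot` is a hypothetical name, defined here via `math.atan2` so that it returns the principal value in (0, π). The check uses the standard form 2 tan⁻¹x = cos⁻¹((1 − x²)/(1 + x²)) for x ≥ 0.

```python
import math

def acot(x):
    """Principal value of cot^-1 x, lying in (0, pi)."""
    return math.atan2(1.0, x)

close = math.isclose
x, y = 0.5, 0.25   # sample values with |x| <= 1 and xy < 1

checks = {
    "asin x + acos x = pi/2": close(math.asin(x) + math.acos(x), math.pi / 2),
    "atan x + acot x = pi/2": close(math.atan(x) + acot(x), math.pi / 2),
    "acos(-x) = pi - acos x": close(math.acos(-x), math.pi - math.acos(x)),
    "acot(-x) = pi - acot x": close(acot(-x), math.pi - acot(x)),
    "atan x + atan y": close(math.atan(x) + math.atan(y),
                             math.atan((x + y) / (1 - x * y))),
    "2 atan x = asin(2x/(1+x^2))": close(2 * math.atan(x),
                                         math.asin(2 * x / (1 + x * x))),
    "2 atan x = acos((1-x^2)/(1+x^2))": close(2 * math.atan(x),
                                              math.acos((1 - x * x) / (1 + x * x))),
}
for name, ok in checks.items():
    print(name, ok)

# Outside the stated domains the formulas fail.
u, v = 2.0, 3.0    # uv > 1: the two sides of the addition formula differ by pi
gap = (math.atan(u) + math.atan(v)) - math.atan((u + v) / (1 - u * v))
print(close(gap, math.pi))

# 2 is outside [-pi/2, pi/2], so asin(sin 2) returns pi - 2 rather than 2.
print(close(math.asin(math.sin(2.0)), math.pi - 2.0))
```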

MATRICES

A matrix is an ordered rectangular array of numbers or functions.

A matrix having m rows and n columns is called a matrix of order m × n.

A = $[a_{ij}]_{m \times 1}$ is a column matrix.

A = $[a_{ij}]_{1 \times n}$ is a row matrix.

An m × n matrix is a square matrix if m = n.

A = $[a_{ij}]_{m \times m}$ is a diagonal matrix if $a_{ij} = 0$ when i ≠ j.

A = $[a_{ij}]_{n \times n}$ is a scalar matrix if $a_{ij} = 0$ when i ≠ j and $a_{ij} = k$ (k is some constant) when i = j.

A = $[a_{ij}]_{n \times n}$ is an identity matrix if $a_{ij} = 1$ when i = j and $a_{ij} = 0$ when i ≠ j.

A zero matrix has all its elements as zero.

A = $[a_{ij}]$ and B = $[b_{ij}]$ are equal (A = B) if (i) A and B are of the same order, and (ii) $a_{ij} = b_{ij}$ for all possible values of i and j.

kA = $k[a_{ij}]_{m \times n} = [k\,a_{ij}]_{m \times n}$

– A = (–1)A

A – B = A + (–1) B

A + B = B + A

(A + B) + C = A + (B + C), where A, B and C are of same order.

k(A + B) = kA + kB, where A and B are of same order, k is constant.

(k + l ) A = kA + lA, where k and l are constant.

If A = $[a_{ij}]_{m \times n}$ and B = $[b_{jk}]_{n \times p}$, then C = AB = $[c_{ik}]_{m \times p}$, where $c_{ik} = \sum_{j=1}^{n} a_{ij} b_{jk}$.

(i) A(BC) = (AB)C, (ii) A(B + C) = AB + AC, (iii) (A + B)C = AC + BC
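The product rule and the three properties above can be sketched in a few lines. This example assumes matrices are stored as lists of rows; `mat_add` and `mat_mul` are hypothetical helper names, and the sample matrices are arbitrary. It also shows that, unlike addition, matrix multiplication is not commutative in general.

```python
def mat_add(A, B):
    """Entrywise sum of two matrices of the same order."""
    return [[a + b for a, b in zip(row_a, row_b)] for row_a, row_b in zip(A, B)]

def mat_mul(A, B):
    """C = AB with c_ik = sum over j of a_ij * b_jk."""
    n = len(B)
    if any(len(row) != n for row in A):
        raise ValueError("number of columns of A must equal number of rows of B")
    p = len(B[0])
    return [[sum(row[j] * B[j][k] for j in range(n)) for k in range(p)]
            for row in A]

A = [[1, 2], [3, 4]]
B = [[0, 1], [1, 0]]
C = [[1, 1], [0, 1]]

print(mat_mul(A, B))   # [[2, 1], [4, 3]]
print(mat_mul(B, A))   # [[3, 4], [1, 2]], so AB != BA here

# (i) associativity and (ii), (iii) distributivity
print(mat_mul(A, mat_mul(B, C)) == mat_mul(mat_mul(A, B), C))
print(mat_mul(A, mat_add(B, C)) == mat_add(mat_mul(A, B), mat_mul(A, C)))
print(mat_mul(mat_add(A, B), C) == mat_add(mat_mul(A, C), mat_mul(B, C)))
```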

If A = $[a_{ij}]_{m \times n}$, then A′ or $A^T$ = $[a_{ji}]_{n \times m}$.

(i) (A′)′ = A, (ii) (kA)′ = kA′, (iii) (A + B)′ = A′ + B′, (iv) (AB)′ = B′A′

A is a symmetric matrix if A′ = A.

A is a skew symmetric matrix if A′ = – A.

Any square matrix can be represented as the sum of a symmetric and a skew symmetric matrix.
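One standard way to obtain this decomposition is A = P + Q with P = ½(A + A′) symmetric and Q = ½(A − A′) skew symmetric. The sketch below assumes integer entries stored as lists of rows and uses `fractions.Fraction` to keep the halves exact; the function names are illustrative.

```python
from fractions import Fraction

def transpose(A):
    return [list(col) for col in zip(*A)]

def symmetric_skew_parts(A):
    """Return (P, Q) with P = (A + A')/2 and Q = (A - A')/2."""
    At = transpose(A)
    n = len(A)
    P = [[Fraction(A[i][j] + At[i][j], 2) for j in range(n)] for i in range(n)]
    Q = [[Fraction(A[i][j] - At[i][j], 2) for j in range(n)] for i in range(n)]
    return P, Q

A = [[1, 2], [4, 3]]
P, Q = symmetric_skew_parts(A)

print(transpose(P) == P)                                   # P' = P
print(transpose(Q) == [[-q for q in row] for row in Q])    # Q' = -Q
print([[p + q for p, q in zip(rp, rq)] for rp, rq in zip(P, Q)] == A)
```

For this A, the parts are P = [[1, 3], [3, 3]] and Q = [[0, −1], [1, 0]].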

If A and B are two square matrices such that AB = BA = I, then B is the inverse matrix of A and is denoted by $A^{-1}$, and A is the inverse of B.

Inverse of a square matrix, if it exists, is unique.

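Elementary operations on a matrix are as follows:

(i) $R_i \leftrightarrow R_j$ or $C_i \leftrightarrow C_j$ (interchange of any two rows or columns)

(ii) $R_i \rightarrow kR_i$ or $C_i \rightarrow kC_i$, where k ≠ 0

(iii) $R_i \rightarrow R_i + kR_j$ or $C_i \rightarrow C_i + kC_j$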

DETERMINANTS

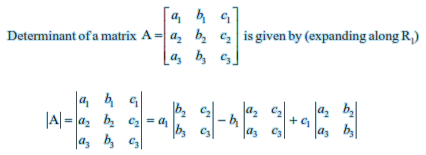

Determinant of a matrix A = $[a_{11}]_{1 \times 1}$ is given by $|a_{11}| = a_{11}$.

Determinant of a matrix A = $\begin{bmatrix}a_{11} & a_{12} \\a_{21} & a_{22} \end{bmatrix}$ is given by |A| = $a_{11} a_{22} - a_{12} a_{21}$.

For any square matrix A, |A| satisfies the following properties:

|A'| = | A|, where A' = transpose of A.

If we interchange any two rows (or columns), then sign of determinant changes.

If any two rows or any two columns are identical or proportional, then value of determinant is zero.

If we multiply each element of a row or a column of a determinant by constant k, then value of determinant is multiplied by k.

Multiplying a determinant by k means multiplying the elements of only one row (or one column) by k.

If A = $[a_{ij}]_{3 \times 3}$, then $|kA| = k^3 |A|$.

If elements of a row or a column in a determinant can be expressed as sum of two or more elements, then the given determinant can be expressed as sum of two or more determinants.

If to each element of a row or a column of a determinant the equimultiples of corresponding elements of other rows or columns are added, then value of determinant remains same.

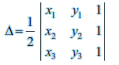

Area of a triangle with vertices (xi, yi) , (xj, yj) and (xk, yk) is given by

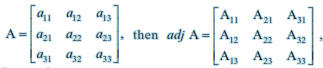

Minor of an element $a_{ij}$ of the determinant of matrix A is the determinant obtained by deleting the i-th row and j-th column; it is denoted by $M_{ij}$.

Cofactor of $a_{ij}$ is given by $A_{ij}$ = $(-1)^{i+j}M_{ij}$

The value of the determinant of a matrix A is the sum of the products of the elements of a row (or a column) with their corresponding cofactors. For example, $|A| = a_{11}A_{11} + a_{12}A_{12} + a_{13}A_{13}$.

If the elements of one row (or column) are multiplied by the cofactors of the corresponding elements of any other row (or column), then their sum is zero. For example, $a_{11}A_{21} + a_{12}A_{22} + a_{13}A_{23} = 0$.
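The minor, cofactor, and expansion rules can be sketched as a short recursive routine. This is an illustrative implementation, assuming matrices are lists of rows with 0-based indices (the sign $(-1)^{i+j}$ is unchanged by the shift from 1-based indices); it is meant for small matrices, since cofactor expansion is slow for large ones.

```python
def minor(A, i, j):
    """Determinant left after deleting row i and column j (0-based)."""
    sub = [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]
    return det(sub)

def cofactor(A, i, j):
    return (-1) ** (i + j) * minor(A, i, j)

def det(A, row=0):
    """Expand along the given row: sum of elements times their cofactors."""
    n = len(A)
    if n == 1:
        return A[0][0]
    return sum(A[row][j] * cofactor(A, row, j) for j in range(n))

A = [[1, 2, 3],
     [0, 4, 5],
     [1, 0, 6]]

print(det(A), det(A, row=1), det(A, row=2))   # 22 22 22

# Elements of the first row with cofactors of the second row sum to zero.
print(sum(A[0][j] * cofactor(A, 1, j) for j in range(3)))   # 0

# |A'| = |A| and, for a 3 x 3 matrix, |kA| = k^3 |A|.
A_t = [list(col) for col in zip(*A)]
k = 2
kA = [[k * a for a in row] for row in A]
print(det(A_t) == det(A), det(kA) == k ** 3 * det(A))
```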

In the image below, $A_{ij}$ is the cofactor of $a_{ij}$.

A (adj A) = (adj A) A = | A| I, where A is square matrix of order n

A square matrix A is said to be singular or non-singular according as | A| = 0 or | A| ≠ 0.

If AB = BA = I, where B is a square matrix, then B is called the inverse of A. Also $A^{-1} = B$ or $B^{-1} = A$, and hence $(A^{-1})^{-1} = A$.

A square matrix A has inverse if and only if A is non-singular.

$A^{-1} = \frac{1}{|A|} (\operatorname{adj} A)$, where |A| ≠ 0
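The adjoint formula can be tried on a small example. The sketch below assumes integer matrices of order n ≥ 2 stored as lists of rows, takes adj A to be the transpose of the matrix of cofactors, and uses `fractions.Fraction` for exact division by |A|. All function names are hypothetical.

```python
from fractions import Fraction

def det(A):
    """Determinant by cofactor expansion along the first row."""
    if len(A) == 1:
        return A[0][0]
    return sum(A[0][j] * cofactor(A, 0, j) for j in range(len(A)))

def cofactor(A, i, j):
    sub = [row[:j] + row[j + 1:] for r, row in enumerate(A) if r != i]
    return (-1) ** (i + j) * det(sub)

def adjugate(A):
    """adj A: entry (i, j) is the cofactor of a_ji (transpose of cofactors)."""
    n = len(A)
    return [[cofactor(A, j, i) for j in range(n)] for i in range(n)]

def inverse(A):
    d = det(A)
    if d == 0:
        raise ValueError("A is singular, so it has no inverse")
    return [[Fraction(x, d) for x in row] for row in adjugate(A)]

def mat_mul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[1, 2, 3],
     [0, 4, 5],
     [1, 0, 6]]
I3 = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]
det_A_times_I = [[det(A) * v for v in row] for row in I3]

# A (adj A) = (adj A) A = |A| I
print(mat_mul(A, adjugate(A)) == det_A_times_I)
print(mat_mul(adjugate(A), A) == det_A_times_I)

# A A^-1 = A^-1 A = I
A_inv = inverse(A)
print(mat_mul(A, A_inv) == I3, mat_mul(A_inv, A) == I3)
```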

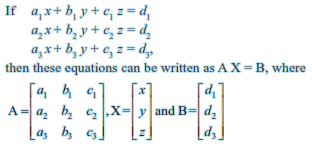

The unique solution of the equation AX = B is given by X = $A^{-1}B$, where |A| ≠ 0.

A system of equations is consistent or inconsistent according as its solution exists or not.

For a square matrix A in the matrix equation AX = B:

(i) if |A| ≠ 0, there exists a unique solution;

(ii) if |A| = 0 and (adj A) B ≠ O, there exists no solution;

(iii) if |A| = 0 and (adj A) B = O, the system may or may not be consistent (it has either infinitely many solutions or no solution).
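The three cases can be illustrated with a small classifier. To keep the sketch short it is restricted to 2 × 2 systems, where |A| = ad − bc and adj A = [[d, −b], [−c, a]]. The function name and the returned labels are assumptions made for this example.

```python
from fractions import Fraction

def classify_2x2(A, B):
    """Classify AX = B for a 2 x 2 integer matrix A and a column B = [p, q]."""
    (a, b), (c, d) = A
    p, q = B
    det_A = a * d - b * c
    adj_B = [d * p - b * q, -c * p + a * q]      # (adj A) B
    if det_A != 0:
        # Unique solution X = A^-1 B = (adj A) B / |A|
        return "unique", [Fraction(v, det_A) for v in adj_B]
    if adj_B != [0, 0]:
        return "inconsistent", None
    # |A| = 0 and (adj A) B = O: the test alone does not decide consistency.
    return "undetermined", None

print(classify_2x2([[2, 1], [5, 3]], [1, 2]))   # unique solution x = 1, y = -1
print(classify_2x2([[1, 2], [2, 4]], [1, 3]))   # inconsistent
print(classify_2x2([[1, 2], [2, 4]], [1, 2]))   # undetermined, here infinitely many solutions
print(classify_2x2([[0, 0], [0, 0]], [1, 1]))   # undetermined, here no solution
```

The last two calls show why the third case is inconclusive: both satisfy |A| = 0 and (adj A) B = O, yet x + 2y = 1 has infinitely many solutions while 0 = 1 has none.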