Filesystems and the VFS

There's something known as the VFS, or Virtual File System layer, which sits between the actual filesystems and the applications that want to use files.

These applications do not need to know what kind of filesystem the data is on, what the physical media is, or what hardware is involved.

So, whenever an application needs to access a file, it makes a call to the virtual filesystem, and that call is then translated into methods that the actual filesystem and hardware can use.

Linux does this in a very robust way and it works with more filesystem varieties than any other operating system. And this has really helped contribute to the success of Linux, because it makes it relatively easy to move to Linux from other operating systems.

Now, some filesystem types are sufficiently different from the Linux native ones, or Unix ones in general, that they require more manipulation in order to be used within Linux.

For instance, VFAT, which comes from Microsoft, doesn't have read/write/execute permissions for owner, group and world, the three types of entities that standard Unix filesystems have to deal with.

So, you have to make assumptions about how to specify the permissions for these three different types of entities. And, you also have to specify who owns the files, etc.
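As a rough illustration of those assumptions: the Linux vfat driver accepts mount options such as uid=, gid=, umask=, fmask= and dmask=, and the permissions reported for each file are 0777 with the mask bits cleared. The sketch below only does that arithmetic. The device name and mount point in the comment are hypothetical, and mode_for_mask is a helper made up for this example.

```shell
#!/usr/bin/env bash
# Sketch: which permissions a given mask produces on a VFAT mount.
# A real mount might look like this (device and mount point are made up):
#   sudo mount -t vfat -o uid=1000,gid=1000,fmask=133,dmask=022 /dev/sdb1 /mnt/usb

# mode_for_mask MASK: print the octal mode left after clearing MASK's bits.
mode_for_mask() {
    # 8#... forces the argument to be read as octal, as chmod and umask do.
    printf '%03o\n' $(( 0777 & ~8#$1 ))
}

mode_for_mask 022   # directories with dmask=022 -> 755
mode_for_mask 133   # files with fmask=133       -> 644
```

Every file on the mounted filesystem then appears with the same owner, group and mode, since VFAT itself has nowhere to store per-file values.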

Also, the way metadata or information about the filesystem as a whole is stored is quite different.

VFAT has an FAT, a File Allocation Table, at the beginning of the disk, rather than keeping that information in the actual directories. It's less secure and more prone to failure.

Newer filesystems have what is known as journaling capabilities.

Now, the native filesystems that arose in Linux are the ext2, ext3 and ext4 variants, which have succeeded each other in turn. They all have utilities for formatting the filesystem and for checking the filesystem.

You can set a lot of parameters when you first format a partition for the filesystem, or tune them after creation. These days people generally use ext4.

The internal kernel drivers are now all ext4, but they can run in an ext3 mode.

Journaling filesystems recover quickly from system crashes or from a bad shutdown, such as when the power goes out, and they do so with little or no corruption.

So when the system reboots, you only have to check the most recent transactions that were going on when things went bad, rather than check the entire filesystem. So, journaling filesystems work with what are known as transactions.

They have to be completed without error, what we call atomically. In other words, either they happen or they don't. They're never left in an uncertain state.

So, of the journaling filesystems easily available on Linux, ext3 and ext4 are pretty dominant.

Reiser was actually the first journaling implementation in the Linux kernel, but it is no longer being maintained and there's no development on it, for complicated reasons.

JFS came over from IBM, XFS came over from SGI, and btrfs (Butter FS, or the B-tree filesystem) is the most recent one that is native to Linux and has a lot of advanced features.

XFS is now the default filesystem on Red Hat Enterprise Linux systems, and btrfs is the default filesystem on SUSE systems. The main development in Butter FS was shepherded by Chris Mason. It has been around for quite some time and has some very advanced capabilities.

It can take frequent snapshots of the entire filesystem, which you can then roll back to, and you can do that even for sub-volumes, or sections of the entire filesystem, and do it almost instantly.

And it does that without wasting much space, because it uses COW or copy-on-write techniques.

So, you only have to keep extra copies of the things which change, not of the entire filesystem.

It has its own internal framework for adding and removing partitions and physical media, so you can shrink or expand filesystems, much as LVM (Logical Volume Management) does.

There's still some debate about whether Butter FS is suitable for day-to-day use in critical filesystems.

However, SUSE and some embedded systems are actually using it as the default filesystem. So, in at least many people's opinion, it is quite stable and can be used every day in critical situations.

Using Available Filesystems

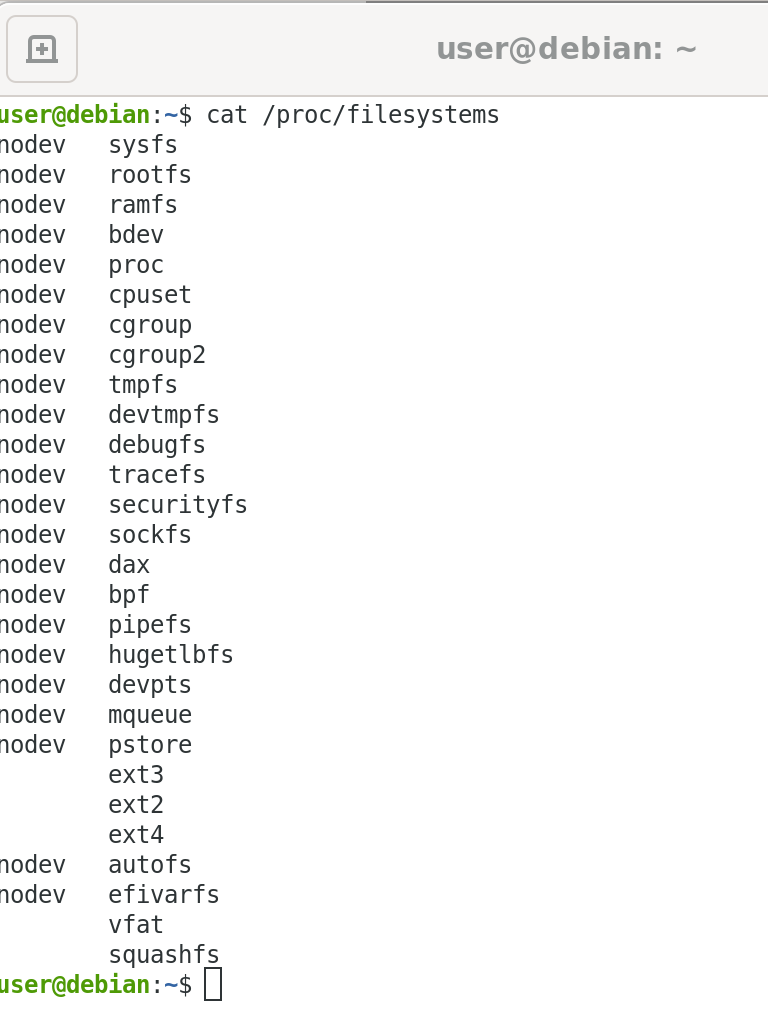

At any given time, you can see what filesystems your system currently understands by doing "cat /proc/filesystems".

The "nodev" ones are so-called pseudo-filesystems, but then, we see more conventional ones down here, like "ext3", "btrfs", "fuse", "ext4", etc.

You will notice that "xfs" is not on the list. The kernel would understand it if we were to load it as a module, but it is not currently loaded or built into the system.
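A small sketch of how that check might be scripted. It assumes the usual /proc/filesystems layout, where the filesystem name is the last field on each line and pseudo-filesystems carry a leading "nodev" field. The function names are made up for this example.

```shell
#!/usr/bin/env bash
# fs_supported NAME [FILE]: succeed if NAME is listed in FILE
# (FILE defaults to /proc/filesystems).
fs_supported() {
    local name="$1" file="${2:-/proc/filesystems}"
    awk -v n="$name" 'NF && $NF == n { found = 1 } END { exit !found }' "$file"
}

# list_disk_filesystems [FILE]: print only the types that are not
# marked "nodev", i.e. the ones that expect a block device.
list_disk_filesystems() {
    local file="${1:-/proc/filesystems}"
    awk 'NF && $1 != "nodev" { print $NF }' "$file"
}

# Example use on a Linux system:
#   fs_supported xfs || sudo modprobe xfs   # load the module if it is missing
if [ -r /proc/filesystems ]; then
    list_disk_filesystems
fi
```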

Mounting Filesystems

In UNIX-like operating systems, all files are arranged in one big filesystem tree rooted at /.

Many different partitions on many different devices may be coalesced together by mounting partitions on various mount points, or directories in the tree.

The full form of the mount command is:

$ sudo mount [-t type] [-o options] device dir

In most cases, the filesystem type can be deduced automatically from the first few bytes of the partition, and default options can be used, so it can be as simple as:

$ sudo mount /dev/sda8 /usr/local

Most filesystems need to be mounted at boot, and the information required to specify mount points, options, devices, etc., is specified in /etc/fstab:

Note that in this example, most of the filesystems are mounted by label; it is also possible to mount by device name or UUID; the following are all equivalent:

$ sudo mount /dev/sda2 /boot

$ sudo mount LABEL=boot /boot

$ sudo mount -L boot /boot

$ sudo mount UUID=26d58ee2-9d20-4dc7-b6ab-aa87c3cfb69a /boot

$ sudo mount -U 26d58ee2-9d20-4dc7-b6ab-aa87c3cfb69a /boot
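For reference, each entry in /etc/fstab is one line of six whitespace-separated fields: the device (a name, LABEL= or UUID=), the mount point, the filesystem type, the mount options, the dump flag and the fsck pass number. The sketch below lists the entries of such a file. The sample contents are made up for illustration and are not taken from any real system, and fstab_entries is a hypothetical helper.

```shell
#!/usr/bin/env bash
# fstab_entries [FILE]: print "mountpoint type device" for each real entry,
# skipping blank lines and comments. Assumes no whitespace inside fields.
fstab_entries() {
    local file="${1:-/etc/fstab}"
    awk 'NF >= 3 && $1 !~ /^#/ { print $2, $3, $1 }' "$file"
}

# A made-up fstab, for illustration only.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# <device>   <dir>       <type> <options> <dump> <pass>
LABEL=root   /           ext4   defaults  1 1
LABEL=boot   /boot       ext3   defaults  1 2
/dev/sda8    /usr/local  ext4   defaults  1 2
EOF

fstab_entries "$sample"
rm -f "$sample"
```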

The list of currently mounted filesystems can be seen with:

$ sudo mount

/dev/sda5 on / type ext3 (rw)

proc on /proc type proc (rw)

sysfs on /sys type sysfs (rw)

devpts on /dev/pts type devpts (rw,gid=5,mode=620)

/dev/sda6 on /RHEL6-32 type ext3 (rw)

/dev/mapper/VGN-local on /usr/local type ext4 (rw)

/dev/mapper/VGN-tmp on /tmp type ext4 (rw)

/dev/mapper/VGN-src on /usr/src type ext4 (rw)

/dev/mapper/VGN-virtual on /VIRTUAL type ext4 (rw)

/dev/mapper/VGN-beagle on /BEAGLE type ext4 (rw)

tmpfs on /dev/shm type tmpfs (rw)

debugfs on /sys/kernel/debug type debugfs (rw)

/dev/sda1 on /c type fuseblk (rw,allow_other,default_permissions,blksize=4096)

/usr/local/teaching/FTP/LFT on /var/ftp/pub2 type none (rw,bind)

/ISO_IMAGES/CENTOS/CentOS-5.5-x86_64-bin-DVD-1of2.iso on /var/ftp/pub type iso9660 (rw,loop=/dev/loop0)

sunrpc on /var/lib/nfs/rpc_pipefs type rpc_pipefs (rw)

/dev/sda2 on /boot type ext3 (rw)

If a directory is used as a mount point, its previous contents are hidden under the newly mounted filesystem. A given partition can be mounted in more than one place and changes are effective in all locations.
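A listing like the one above can be filtered with a few lines of shell. This is a minimal sketch that assumes the "DEVICE on DIR type TYPE (OPTIONS)" layout shown in that output and no whitespace inside device or directory names. mounts_of_type is a made-up helper name.

```shell
#!/usr/bin/env bash
# mounts_of_type TYPE: read mount-style lines on stdin and print the
# mount point of every entry whose filesystem type is TYPE.
mounts_of_type() {
    awk -v t="$1" '$2 == "on" && $4 == "type" && $5 == t { print $3 }'
}

# Typical use on a live system:
#   mount | mounts_of_type ext4
# Here it is fed a few lines copied from the listing above.
mounts_of_type ext4 <<'EOF'
/dev/sda5 on / type ext3 (rw)
proc on /proc type proc (rw)
/dev/mapper/VGN-local on /usr/local type ext4 (rw)
/dev/mapper/VGN-tmp on /tmp type ext4 (rw)
EOF
```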

You can also mount NFS (Network File Systems) as in:

RAID and LVM

Let's consider how Linux makes use of RAID, Redundant Array of Independent Disks, and LVM, Logical Volume Management.

With RAID, the idea is that instead of just having one disk, you spread your input/output over multiple disks or spindles.

A lot of modern disk controller interfaces allow parallel transport to each of the devices, so that you can actually get more throughput, because you can work on more than one disk at once.

Now, this can be implemented in software, and the software implementation is a very mature part of the Linux kernel. Or, generally better, it can be done in hardware, with the RAID controller built right into the motherboard, let's say, of a computer.

If you know that you have that, it probably offers better quality and efficiency than using software RAID.

When you use the hardware implementation, the operating system doesn't even really have to be aware that it's using RAID. It's very transparent, and so you don't have to write any of your software in any kind of different way.

Now, three essential features of RAID are:

Mirroring: you write the same data to more than one disk. So, if one of the disks were to go bad, you would be able to recover what you were doing anyway.

Striping: that's splitting data across more than one disk. That's what we were talking about when we talked about using multiple spindles.

Parity: that's storing some extra data that lets you figure out if something has been corrupted or is a little bit wrong, so you can repair it. So you get some fault tolerance.

There are various levels of RAID, from zero to five. As you go up in level, it gets more complex.

Generally, you need to use more storage, more disk, let's say, but you also get much better protection and double coverage and redundancy as you go up.
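To make the parity idea concrete: in schemes such as RAID 5, the parity block is commonly the bitwise XOR of the data blocks in a stripe, so any single missing block can be rebuilt by XOR-ing everything that survives. The sketch below shows this on three single bytes. The byte values are arbitrary, xor_parity is a made-up helper, and a real array does the same thing over whole blocks.

```shell
#!/usr/bin/env bash
# xor_parity VALUE...: print the XOR of all arguments as a decimal number.
xor_parity() {
    local p=0 x
    for x in "$@"; do
        p=$(( p ^ x ))
    done
    printf '%d\n' "$p"
}

# Three "data disks" holding one byte each (arbitrary example values).
d1=0xA5; d2=0x3C; d3=0x5A
parity=$(xor_parity "$d1" "$d2" "$d3")

# Pretend the second disk failed: rebuild its byte from parity + survivors.
rebuilt=$(xor_parity "$parity" "$d1" "$d3")
printf 'parity=0x%02X rebuilt d2=0x%02X\n' "$parity" "$rebuilt"
```

This costs one extra disk's worth of storage per stripe, rather than the full second copy that mirroring needs.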

Logical Volume Management is a different beast. Here, instead of just having one physical partition that you would store a filesystem in, you can have the actual physical partitions broken up into a series of small pieces called extents.

These can be grouped together to form what are called logical volumes, which look like a partition to applications and to many of the utilities on the system, but which can really be split up across a lot of different places.

One of the advantages to using LVM is it becomes really easy to change the size of these partitions and filesystems. With regular physical partitions, it can be rather tedious and difficult to expand or shrink the size of a partition.

You can easily add new physical volumes to a volume group as they are required, or you can remove them as well. So, there's a lot more flexibility here.

It does mean that some of the data will be split up in various locations, but as people get away from rotational disks and start using SSDs, it becomes less important that data be adjacent to each other.

Using LVM

Let's get some practice examining our LVM setup, and doing things like creating a new logical volume, and putting a filesystem on it, etc.

So first, let's see what physical volumes are in use in our system. And we can do that with:

sudo pvdisplay | grep sd

And you'll see the different hard disk partitions which are being used as physical volumes, and being used in the volume groups.

So, we may see that we have both "sdb" and "sda". On this system, "sda" is an old-fashioned rotational hard disk. "sdb" is an SSD, which is much faster, so we try to put performance-sensitive data on "sdb".

If I just type

sudo pvdisplay

I get information about each physical volume on the system. So, you'll see exactly how big each one is. For instance, "sda2" is 500 gigabytes in size. And the extents, or the units in which the physical volume is chunked up, are 4 megabytes each, which is what's called the "PE" (physical extent) size.
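As a quick sanity check on those numbers, the extent count is just the volume size divided by the extent size, rounded up to a whole extent. The sketch below treats gigabytes and megabytes as binary units (GiB and MiB), which is an assumption. The count pvdisplay reports for a real volume may be slightly lower, since LVM keeps some space for its own metadata. extents_needed is a made-up helper.

```shell
#!/usr/bin/env bash
# extents_needed SIZE_MIB [PE_MIB]: number of extents needed to hold
# SIZE_MIB mebibytes, rounded up to a whole extent (PE size defaults to 4).
extents_needed() {
    local size_mib="$1" pe_mib="${2:-4}"
    echo $(( (size_mib + pe_mib - 1) / pe_mib ))
}

extents_needed $(( 500 * 1024 ))   # 500 GiB in 4 MiB extents -> 128000
extents_needed 10                  # 10 MiB needs 3 extents (12 MiB)
```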

If I want to see what volume groups are on the system, I can do

sudo vgdisplay

And you'll see, there are volume groups "VG" and "VG2".

If I want to see what logical volumes I have on my system, I could do

sudo lvdisplay

And those are the different logical volumes that are on the system. If I just try "sudo lvdisplay", I get details about each logical volume that's on the system.
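Creating a new logical volume and putting a filesystem on it might look like the following sketch. It is written as a dry run that only prints the commands unless DO_IT=1 is set, because actually running them needs root and an existing volume group. "VG" is the volume group name seen above, while the logical volume name, size and mount point are hypothetical.

```shell
#!/usr/bin/env bash
# run CMD...: print the command, and execute it only if DO_IT=1.
run() {
    echo "+ $*"
    if [ "${DO_IT:-0}" = "1" ]; then
        "$@"
    fi
}

# create_lv VG LV SIZE MOUNTPOINT
create_lv() {
    local vg="$1" lv="$2" size="$3" mnt="$4"
    run sudo lvcreate -L "$size" -n "$lv" "$vg"   # carve the LV out of the VG
    run sudo mkfs.ext4 "/dev/$vg/$lv"             # format the new block device
    run sudo mkdir -p "$mnt"                      # make sure the mount point exists
    run sudo mount "/dev/$vg/$lv" "$mnt"          # and mount it
}

create_lv VG demo_lv 1G /mnt/demo
```

Afterwards, "sudo lvdisplay" should list the new volume alongside the existing ones.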

Loopback filesystems

Linux systems often use loopback filesystems, in which a normal file is treated as an entire filesystem image.

First, create an empty file by doing:

$ dd if=/dev/zero of=/tmp/part count=500 bs=1M

which will create an empty 500 MB file named /tmp/part. You can adjust the size if you are short on space.

You can then put an ext3 filesystem on the file by doing:

$ mkfs.ext3 /tmp/part

which you can then mount by doing:

$ mkdir /tmp/mntpart

$ sudo mount -o loop /tmp/part /tmp/mntpart

$ df -T

Filesystem Type 1K-blocks Used Available Use% Mounted on

/dev/sda5 ext3 10157148 6238904 3393960 65% /

....

/tmp/part ext3 495844 10544 459700 3% /tmp/mntpart

Once it is mounted, you can create files on it, etc., and they will be preserved across remount cycles.

You can check the filesystem by doing:

$ sudo umount /tmp/mntpart

$ fsck.ext3 -f /tmp/part

and get additional information by doing:

$ dumpe2fs /tmp/part

and change filesystem parameters by doing:

$ tune2fs /tmp/part

For example, you could change the maximum-mount-count or reserved-blocks-count parameters.
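A sketch of what that tuning could look like on an image file. The -c option of tune2fs sets the maximum mount count and -r sets the reserved block count. The values 25 and 1000 are arbitrary, the function names are made up, and the e2fsprogs tools are assumed to be installed.

```shell
#!/usr/bin/env bash
# make_image FILE SIZE_MB: create an empty image file of SIZE_MB megabytes.
make_image() {
    dd if=/dev/zero of="$1" bs=1M count="$2" 2>/dev/null
}

# retune_image FILE: example tuning of an unmounted ext2/3/4 image,
# then show the two changed values from the superblock header.
retune_image() {
    tune2fs -c 25 "$1"      # force a check after at most 25 mounts
    tune2fs -r 1000 "$1"    # keep 1000 blocks reserved for privileged use
    dumpe2fs -h "$1" 2>/dev/null |
        grep -E 'Maximum mount count|Reserved block count'
}

# Typical sequence (a plain file needs no root; older mkfs versions may
# ask for confirmation or need -F when the target is not a block device):
#   make_image /tmp/part 500 && mkfs.ext3 /tmp/part && retune_image /tmp/part
```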

Loopback filesystems have lower performance, because every access has to pass through the underlying filesystem that holds the image file, but they are still quite useful.